Abstract

Concurrent computations resemble conversations. In a conversation, participants direct utterances at others and, as the conversation evolves, exploit the known common context to advance the conversation. Similarly, collaborating software components share knowledge with each other in order to make progress as a group towards a common goal.

This dissertation studies concurrency from the perspective of cooperative knowledge-sharing, taking the conversational exchange of knowledge as a central concern in the design of concurrent programming languages. In doing so, it makes five contributions:

- It develops the idea of a common dataspace as a medium for knowledge exchange among concurrent components, enabling a new approach to concurrent programming.

While dataspaces loosely resemble both “fact spaces” from the world of Linda-style languages and Erlang's collaborative model, they significantly differ in many details.

- It offers the first crisp formulation of cooperative, conversational knowledge-exchange as a mathematical model.

- It describes two faithful implementations of the model for two quite different languages.

- It proposes a completely novel suite of linguistic constructs for organizing the internal structure of individual actors in a conversational setting.

The combination of dataspaces with these constructs is dubbed Syndicate.

- It presents and analyzes evidence suggesting that the proposed techniques and constructs combine to simplify concurrent programming.

The dataspace concept stands alone in its focus on representation and manipulation of conversational frames and conversational state and in its integral use of explicit epistemic knowledge. The design is particularly suited to integration of general-purpose I/O with otherwise-functional languages, but also applies to actor-like settings more generally.

Acknowledgments

Networking is interprocess communication.

—Robert Metcalfe, 1972, quoted in Day (2008)

I am deeply grateful to the many, many people who have supported, taught, and encouraged me over the past seven years.

My heartfelt thanks to my advisor, Matthias Felleisen. Matthias, it has been an absolute privilege to be your student. Without your patience, insight and willingness to let me get the crazy ideas out of my system, this work would not have been possible. My gratitude also to the members of my thesis committee, Mitch Wand, Sam Tobin-Hochstadt, and Jan Vitek. Sam in particular helped me convince Matthias that there might be something worth looking into in this concurrency business. I would also like to thank Olin Shivers for providing early guidance during my studies.

Thanks also to my friends and colleagues from the Programming Research Lab, including Claire Alvis, Leif Andersen, William Bowman, Dan Brown, Sam Caldwell, Stephen Chang, Ben Chung, Andrew Cobb, Ryan Culpepper, Christos Dimoulas, Carl Eastlund, Spencer Florence, Oli Flückiger, Dee Glaze, Ben Greenman, Brian LaChance, Ben Lerner, Paley Li, Max New, Jamie Perconti, Gabriel Scherer, Jonathan Schuster, Justin Slepak, Vincent St-Amour, Paul Stansifer, Stevie Strickland, Asumu Takikawa, Jesse Tov, and Aaron Turon. Sam Caldwell deserves particular thanks for being the second ever Syndicate programmer and for being willing to pick up the ideas of Syndicate and run with them.

Many thanks to Alex Warth and Yoshiki Ohshima, who invited me to intern at CDG Labs with a wonderful research group during summer and fall 2014, and to John Day, whose book helped motivate me to return to academia. Thanks also to the DARPA CRASH program and to several NSF grants that helped to fund my PhD research.

I wouldn't have made it here without crucial interventions over the past few decades from a wide range of people. Nigel Bree hooked me on Scheme in the early '90s, igniting a lifelong interest in functional programming. A decade later, while working at a company called LShift, my education as a computer scientist truly began when Matthias Radestock and Greg Meredith introduced me to the π-calculus and many related ideas. Andy Wilson broadened my mind with music, philosophy and political ideas both new and old. A few years later, Alexis Richardson showed me the depth and importance of distributed systems as we developed new ideas about messaging middleware and programming languages while working together on RabbitMQ. My colleagues at LShift were instrumental to the development of the ideas that ultimately led to this work. My thanks to all of you. In particular, I owe an enormous debt of gratitude to my good friend Michael Bridgen. Michael, the discussions we have had over the years contributed to this work in so many ways that I'm still figuring some of them out.

Life in Boston wouldn't have been the same without the friendship and hospitality of Scott and Megs Stevens. Thank you both.

Finally, I'm grateful to my family. The depth of my feeling prevents me from adequately conveying quite how grateful I am. Thank you Mum, Dad, Karly, Casey, Sabrina, and Blyss. Each of you has made an essential contribution to the person I've become, and I love you all. Thank you to the Yates family and to Warren, Holden and Felix for much-needed distraction and moments of zen in the midst of the write-up. But most of all, thank you to Donna. You're my person.

Tony Garnock-Jones

Boston, Massachusetts

December 2017

Contents

- I Background

- 1 Introduction

- 2 Philosophy and Overview of the Syndicate Design

- 2.1 Cooperating by sharing knowledge

- 2.2 Knowledge types and knowledge flow

- 2.3 Unpredictability at run-time

- 2.4 Unpredictability in the design process

- 2.5 Syndicate's approach to concurrency

- 2.6 Syndicate design principles

- 2.7 On the name “Syndicate”

- 3 Approaches to Coordination

- 3.1 A concurrency design landscape

- 3.2 Shared memory

- 3.3 Message-passing

- 3.4 Tuplespaces and databases

- 3.5 The fact space model

- 3.6 Surveying the landscape

- II Theory

- 4 Computational Model I: The Dataspace Model

- 4.1 Abstract dataspace model syntax and informal semantics

- 4.2 Formal semantics of the dataspace model

- 4.3 Cross-layer communication

- 4.4 Messages versus assertions

- 4.5 Properties

- 4.6 Incremental assertion-set maintenance

- 4.7 Programming with the incremental protocol

- 4.8 Styles of interaction

- 5 Computational Model II: Syndicate

- 5.1 Abstract Syndicate/λ syntax and informal semantics

- 5.2 Formal semantics of Syndicate/λ

- 5.3 Interpretation of events

- 5.4 Interfacing Syndicate/λ to the dataspace model

- 5.5 Well-formedness and Errors

- 5.6 Atomicity and isolation

- 5.7 Derived forms: and

- 5.8 Properties

- III Practice

- 6 Syndicate/rkt Tutorial

- 6.1 Installation and brief example

- 6.2 The structure of a running program: ground dataspace, driver actors

- 6.3 Expressions, values, mutability, and data types

- 6.4 Core forms

- 6.5 Derived and additional forms

- 6.6 Ad-hoc assertions

- 7 Implementation

- 7.1 Representing Assertion Sets

- 7.1.1 Background

- 7.1.2 Semi-structured assertions & wildcards

- 7.1.3 Assertion trie syntax

- 7.1.4 Compiling patterns to tries

- 7.1.5 Representing Syndicate data structures with assertion tries

- 7.1.6 Searching

- 7.1.7 Set operations

- 7.1.8 Projection

- 7.1.9 Iteration

- 7.1.10 Implementation considerations

- 7.1.11 Evaluation of assertion tries

- 7.1.12 Work related to assertion tries

- 7.2 Implementing the dataspace model

- 7.2.1 Assertions

- 7.2.2 Patches and multiplexors

- 7.2.3 Processes and behavior functions

- 7.2.4 Dataspaces

- 7.2.5 Relays

- 7.3 Implementing the full Syndicate design

- 7.3.1 Runtime

- 7.3.2 Syntax

- 7.3.3 Dataflow

- 7.4 Programming tools

- 7.4.1 Sequence diagrams

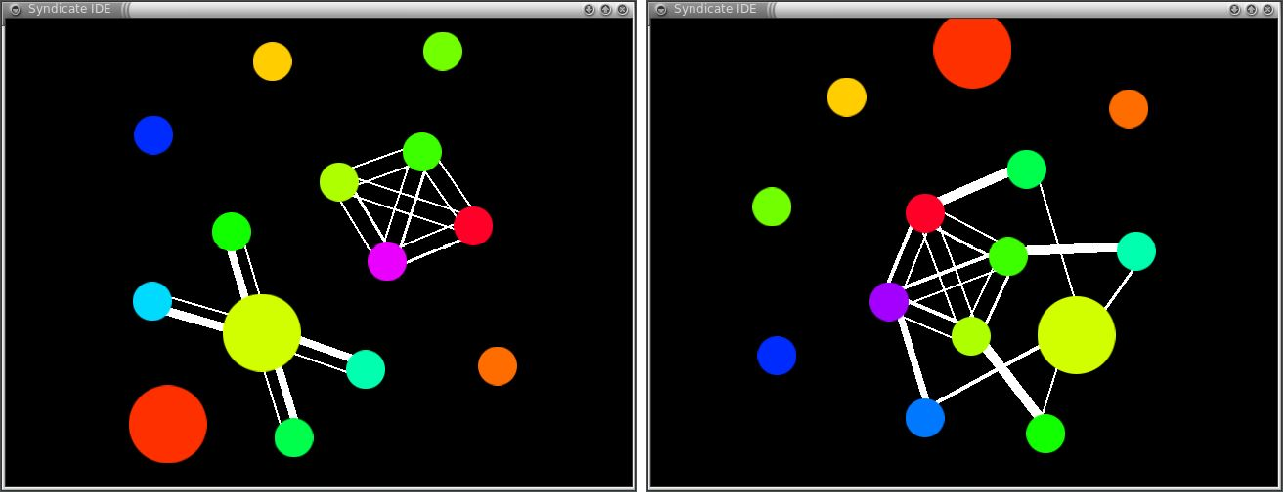

- 7.4.2 Live program display

- 8 Idiomatic Syndicate

- 8.1 Protocols and Protocol Design

- 8.2 Built-in protocols

- 8.3 Shared, mutable state

- 8.4 I/O, time, timers and timeouts

- 8.5 Logic, deduction, databases, and elaboration

- 8.5.1 Forward-chaining

- 8.5.2 Backward-chaining and Hewitt's “Turing” Syllogism

- 8.5.3 External knowledge sources: The file-system driver

- 8.5.4 Procedural knowledge and Elaboration: “Make”

- 8.5.5 Incremental truth-maintenance and Aggregation: All-pairs shortest paths

- 8.5.6 Modal reasoning: Advertisement

- 8.6 Dependency resolution and lazy startup: Service presence

- 8.7 Transactions: RPC, Streams, Memoization

- 8.8 Dataflow and reactive programming

- IV Reflection

- 9 Evaluation: Patterns

- 9.1 Patterns

- 9.2 Eliminating and simplifying patterns

- 9.3 Simplification as key quality attribute

- 9.4 Event broadcast, the observer pattern and state replication

- 9.5 The state pattern

- 9.6 The cancellation pattern

- 9.7 The demand-matcher pattern

- 9.8 Actor-language patterns

- 10 Evaluation: Performance

- 10.1 Reasoning about routing time and delivery time

- 10.2 Measuring abstract Syndicate performance

- 10.3 Concrete Syndicate performance

- 11 Discussion

- 11.1 Placing Syndicate on the map

- 11.2 Placing Syndicate in a wider context

- 11.2.1 Functional I/O

- 11.2.2 Functional operating systems

- 11.2.3 Process calculi

- 11.2.4 Formal actor models

- 11.2.5 Messaging middleware

- 11.3 Limitations and challenges

- 12 Conclusion

- 12.1 Review

- 12.2 Next steps

- A Syndicate/js Syntax

- B Case study: IRC server

- C Polyglot Syndicate

- D Racket Dataflow Library

I Background

1 Introduction

Concurrency and its constant companions, communication and coordination, are ubiquitous in computing. From warehouse-sized datacenters through multi-processor operating systems to interactive or multi-threaded programs, coroutines, and even the humble function, every computation exists in some context and must exchange information with that context in a prescribed manner at a prescribed time. Functions receive inputs from and transmit outputs to their callers; impure functions may access or update a mutable store; threads update shared memory and transfer control via locks; and network services send and receive messages to and from their peers.

Each of these acts of communication contributes to a shared understanding of the relevant knowledge required to undertake some task common to the involved parties. That is, the purpose of communication is to share state: to replicate information from peer to peer. After all, a communication that does not affect a receiver's view of the world literally has no effect. Put differently, each task shared by a group of components entails various acts of communication in the frame of an overall conversation, each of which conveys knowledge to components that need it. Each act of communication contributes to the overall conversational state involved in the shared task. Some of this conversational state relates to what must be or has been done; some relates to when it must be done. Traditionally, the “what” corresponds closely to “communication,” and the “when” to “coordination.”

The central challenge in programming for a concurrent world is the unpredictability of a component's interactions with its context. Pure, total functions are the only computations whose interactions are completely predictable: a single value in leads to a terminating computation which yields a single value out. Introduction of effects such as non-termination, exceptions, or mutability makes function output unpredictable. Broadening our perspective to coroutines makes even the inputs to a component unpredictable: an input may arrive at an unexpected time or may not arrive at all. Threads may observe shared memory in an unexpected state, or may manipulate locks in an unexpected order. Networks may corrupt, discard, duplicate, or reorder messages; network services may delegate tasks to third parties, transmit out-of-date information, or simply never reply to a request.

This seeming chaos is intrinsic: unpredictability is a defining characteristic of concurrency. To remove the one would eliminate the other. However, we shall not declare defeat. If we cannot eliminate harmful unpredictability, we may try to minimize it on one hand, and to cope with it on the other. We may seek a model of computation that helps programmers eliminate some forms of unpredictability and understand those that remain.

To this end, I have developed a new programming language design, Syndicate, which rests on a new model of concurrent computation, the dataspace model. In this dissertation I will defend the thesis that

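Syndicate provides a new, effective, realizable linguistic mechanism for sharing state in a concurrent setting.

This claim must be broken down before it can be understood.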

- Mechanism for sharing state. The dataspace model is, at heart, a mechanism for sharing state among neighboring concurrent components. The design focuses on mechanisms for sharing state because effective mechanisms for communication and coordination follow as special cases. Chapter 2 motivates the Syndicate design, and chapter 3 surveys a number of existing linguistic approaches to coordination and communication, outlining the multi-dimensional design space which results. Chapter 4 then presents a vocabulary for and formal model of dataspaces along with basic correctness theorems.

- Linguistic mechanism. The dataspace model, taken alone, explains communication and coordination among components but does not offer the programmer any assistance in structuring the internals of components. The full Syndicate design presents the primitives of the dataspace model to the programmer by way of new language constructs. These constructs extend the underlying programming language used to write a component, bridging between the language's own computational model and the style of interaction offered by the dataspace model. Chapter 5 presents these new constructs along with an example of their application to a simple programming language.

- Realizability. A design that cannot be implemented is useless; likewise an implementation that cannot be made performant enough to be fit-for-purpose. Chapter 6 examines an example of the integration of the Syndicate design with an existing host language. Chapter 7 discusses the key data structures, algorithms, and implementation techniques that allowed construction of the two Syndicate prototypes, Syndicate/rkt and Syndicate/js.

- Effectiveness. Chapter 8 argues informally for the effectiveness of the programming model by explaining idiomatic Syndicate style through dissection of example protocols and programs. Chapter 9 goes further, arguing that Syndicate eliminates various patterns prevalent in concurrent programming, thereby simplifying programming tasks. Chapter 10 discusses the performance of the Syndicate design, first in terms of the needs of the programmer and second in terms of the actual measured characteristics of the prototype implementations.

- Novelty. Chapter 11 places Syndicate within the map sketched in chapter 3, showing that it occupies a point in design space not covered by other models of concurrency.

Concurrency is ubiquitous in computing, from the very smallest scales to the very largest. This dissertation presents Syndicate as an approach to concurrency within a non-distributed program. However, the design has consequences that may be of use in broader settings such as distributed systems, network architecture, or even operating system design. Chapter 12 concludes the dissertation, sketching possible connections between Syndicate and these areas that may be examined more closely in future work.

2 Philosophy and Overview of the Syndicate Design

Computer Scientists don't do philosophy.

—Mitch Wand

Taking seriously the idea that concurrency is fundamentally about knowledge-sharing has consequences for programming language design. In this chapter I will explore the ramifications of the idea and outline a mechanism for communication among and coordination of concurrent components that stems directly from it.

Concurrency demands special support from our programming languages. Often specific communication mechanisms like message-passing or shared memory are baked in to a language. Sometimes additional coordination mechanisms such as locks, condition variables, or transactions are provided; in other cases, such as in the actor model, the chosen communication mechanisms double as coordination mechanisms. In some situations, the provided coordination mechanisms are even disguised: the event handlers of browser-based JavaScript programs are carefully sequenced by the system, showing that even sequential programming languages exhibit internal concurrency and must face issues arising from the unpredictability of the outside world.

Let us step back from consideration of specific conversational mechanisms, and take a broader viewpoint. Seen from a distance, all these approaches to communication and coordination appear to be means to an end: namely, they are means by which relevant knowledge is shared among cooperating components. Knowledge-sharing is then simply the means by which they cooperate in performing their common task.

Focusing on knowledge-sharing allows us to ask high-level questions that are unavailable to us when we consider specific communication and coordination mechanisms alone:

- K1. What does it mean to cooperate by sharing knowledge?

- K2. What general sorts of facts do components know?

- K3. What do they need to know to do their jobs?

It also allows us to frame the inherent unpredictability of concurrent systems in terms of knowledge. Unpredictability arises in many different ways. Components may crash, or suffer errors or exceptions during their operation. They may freeze, deadlock, enter unintentional infinite loops, or merely take an unreasonable length of time to reply. Their actions may interleave arbitrarily. New components may join and existing components may leave the group without warning. Connections to the outside world may fail. Demand for shared resources may wax and wane. Considering all these issues in terms of knowledge-sharing allows us to ask:

- K4. Which forms of knowledge-sharing are robust in the face of such unpredictability?

- K5. What knowledge helps the programmer mitigate such unpredictability?

Beyond the unpredictability of the operation of a concurrent system, the task the system is intended to perform can itself change in unpredictable ways. Unforeseen program change requests may arrive. New features may be invented, demanding new components, new knowledge, and new connections and relationships between existing components. Existing relationships between components may be altered. Again, our knowledge-sharing perspective allows us to raise the question:

- K6. Which forms of knowledge-sharing are robust to and help mitigate the impact of changes in the goals of a program?

In the remainder of this chapter, I will examine these questions generally and will outline Syndicate's position on them in particular, concluding with an overview of the Syndicate approach to concurrency. We will revisit these questions in chapter 3 when we make a detailed examination of and comparison with other forms of knowledge-sharing embodied in various programming languages and systems.

2.1 Cooperating by sharing knowledge

We have identified conversation among concurrent components abstractly as a mechanism for knowledge-sharing, which itself is the means by which components work together on a common task. However, taken alone, the mere exchange of knowledge is insufficient to judge whether an interaction is cooperative, neutral, or perhaps even malicious. As programmers, we will frequently wish to orchestrate multiple components, all of which are under our control, to cooperate with each other. From time to time, we must equip our programs with the means for responding to non-cooperative, possibly-malicious interactions with components that are not under our control. To achieve these goals, an understanding of what it is to be cooperative is required.

H. Paul Grice, a philosopher of language, proposed the cooperative principle of conversation in order to make sense of the meanings people derive from utterances they hear:

- Cooperative Principle (CP). Make your conversational contribution such as is required, at the stage at which it occurs, by the accepted purpose or direction of the talk exchange in which you are engaged. (Grice 1975)

He further proposed four conversational maxims as corollaries to the CP, presented in figure 1.

Figure 1: Grice's conversational maxims (Grice 1975).

- Quantity.

  1. Make your contribution as informative as required (for the current purposes of the exchange).

  2. Do not make your contribution more informative than is required.

- Quality. Try to make your contribution one that is true.

  1. Do not say what you believe to be false.

  2. Do not say that for which you lack adequate evidence.

- Relation. Be relevant.

- Manner. Be perspicuous.

  1. Avoid obscurity of expression.

  2. Avoid ambiguity.

  3. Be brief (avoid unnecessary prolixity).

  4. Be orderly.

It is important to note the character of these maxims:

They are not sociological generalizations about speech, nor they are moral prescriptions or proscriptions on what to say or communicate. Although Grice presented them in the form of guidelines for how to communicate successfully, I think they are better construed as presumptions about utterances, presumptions that we as listeners rely on and as speakers exploit. (Bach 2005)

Grice's principle and maxims can help us tackle question K1 in two ways. First, they can be read directly as constructive advice for designing conversational protocols for cooperative interchange of information. Second, they can attune us to particular families of design mistakes in such protocols that result from cases in which these “presumptions” are invalid. This can in turn help us come up with guidelines for protocol design that help us avoid such mistakes. Thus, we may use these maxims to judge a given protocol among concurrent components, asking ourselves whether each communication that a component makes lives up to the demands of each maxim.

Grice introduces various ways of failing to fulfill a maxim, and their consequences:

- Unostentatious violation of a maxim, which can mislead peers.

- Explicit opting-out of participation in a maxim or even the Cooperative Principle in general, making plain a deliberate lack of cooperation.

- Conflict between maxims: for example, there may be tension between speaking some necessary (Quantity(1)) truth (Quality(1)), and a lack of evidence in support of it (Quality(2)), which may lead to shaky conclusions down the line.

- Flouting of a maxim: blatant, obviously deliberate violation of a conversational maxim, which “exploits” the maxim, with the intent to force a hearer out of the usual frame of the conversation and into an analysis of some higher-order conversational context.

Many, but not all, of these can be connected to analogous features of computer communication protocols. In this dissertation, I am primarily assuming a setting involving components that deliberately aim to cooperate. We will not dwell on deliberate violation of conversational maxims. However, we will from time to time see that consideration of accidental violation of conversational maxims is relevant to the design and analysis of computer protocols. For example, Grice writes that

[the] second maxim [of Quantity] is disputable; it might be said that to be overinformative is not a transgression of the [Cooperative Principle] but merely a waste of time. However, it might be answered that such overinformativeness may be confusing in that it is liable to raise side issues; and there may also be an indirect effect, in that the hearers may be misled as a result of thinking that there is some particular point in the provision of the excess of information. (Grice 1975)

This directly connects to (perhaps accidental) excessive bandwidth use (“waste of time”) as well as programmer errors arising from exactly the misunderstanding that Grice describes.

It may seem surprising to bring ideas from philosophy of language to bear in the setting of cooperating concurrent computerized components. However, Grice himself makes the connection between his specific conversational maxims and “their analogues in the sphere of transactions that are not talk exchanges,” drawing on examples of shared tasks such as cooking and car repair, so it does not seem out of place to apply them to the design and analysis of our conversational computer protocols. This is particularly the case in light of Grice's ambition to explain the Cooperative Principle as “something that it is reasonable for us to follow, that we should not abandon.” (Grice 1975 p. 48; emphasis in original)

The CP makes mention of the “purpose or direction” of a given conversation. We may view the fulfillment of the task shared by the group of collaborating components as the purpose of the conversation. Each individual component in the group has its own role to play and, therefore, its own “personal” goals in working toward successful completion of the shared task. Kitcher (1990), writing in the context of the social structure of scientific collaboration, introduces the notions of personal and impersonal epistemic intention. We may adapt these ideas to our setting, explicitly drawing out the notion of a role within a conversational protocol. A cooperative component “wishes” for the group as a whole to succeed: this is its “impersonal” epistemic intention. It also has goals for itself, “personal” epistemic intentions, namely to successfully perform its roles within the group.

Finally, the CP is a specific example of the general idea of epistemic reasoning, logical reasoning incorporating knowledge and beliefs about one's own knowledge and beliefs, and about the knowledge and beliefs of other parties (Fagin et al. 2004; Hendricks and Symons 2015; van Ditmarsch, van der Hoek and Kooi 2017). However, epistemic reasoning has further applications in the design of conversational protocols among concurrent components, which brings us to our next topic.

2.2 Knowledge types and knowledge flow

The conversational state that accumulates as part of a collaboration among components can be thought of as a collection of facts. First, there are those facts that define the frame of a conversation. These are exactly the facts that identify the task at hand; we label them “framing knowledge”, and taken together, they are the “conversational frame” for the conversation whose purpose is completion of a particular shared task. Just as tasks can be broken down into more finely-focused subtasks, so can conversations be broken down into sub-conversations. In these cases, part of the conversational state of an overarching interaction will describe a frame for each sub-conversation, within which corresponding sub-conversational state exists. The knowledge framing a conversation acts as a bridge between it and its wider context, defining its “purpose” in the sense of the CP. Figure 2 schematically depicts these relationships.

Some facts define conversational frames, but every shared fact is contextualized within some conversational frame. Within a frame, then, some facts will pertain directly to the task at hand. These, we label “domain knowledge”. Generally, such facts describe global aspects of the common problem that remain valid as we shift our perspective from participant to participant. Other facts describe the knowledge or beliefs of particular components. These, we label “epistemic knowledge”.

For example, as a file transfer progresses, the actual content of the file does not change: it remains a global fact that byte number 300 (say) has value 255, no matter whether the transfer has reached that position or not. The content of the file is thus “domain knowledge”. However, as the transfer proceeds and acknowledgements of receipt stream from the recipient to the transmitter, the transmitter's beliefs about the receiver's knowledge change. Each successive acknowledgement leads the transmitter to believe that the receiver has learned a little more of the file's content. Information on the progress of the transfer is thus “epistemic knowledge”.
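The distinction can be made concrete with a minimal Python sketch. The `Transmitter` class, its `acked` field, and its method names are hypothetical, invented here for illustration only; they are not part of Syndicate. The file content plays the role of domain knowledge, while the acknowledgement counter records the transmitter's belief about what the receiver has learned.

```python
from dataclasses import dataclass


@dataclass
class Transmitter:
    """Hypothetical sender in a file transfer (illustrative only)."""

    content: bytes  # domain knowledge: fixed for the whole transfer
    acked: int = 0  # epistemic knowledge: bytes the receiver is believed to hold

    def byte_at(self, offset: int) -> int:
        # A domain fact: independent of how far the transfer has progressed.
        return self.content[offset]

    def on_ack(self, upto: int) -> None:
        # Each acknowledgement extends the transmitter's belief about the receiver.
        self.acked = max(self.acked, upto)

    def believes_receiver_knows(self, offset: int) -> bool:
        return offset < self.acked


# A 512-byte file whose byte number 300 has value 255, as in the example above.
content = bytes(300) + bytes([255]) + bytes(211)
tx = Transmitter(content)

before = (tx.byte_at(300), tx.believes_receiver_knows(300))
tx.on_ack(301)  # the receiver acknowledges bytes 0 through 300
after = (tx.byte_at(300), tx.believes_receiver_knows(300))
```

Only the second component of each pair changes across the acknowledgement: the domain fact is stable, while the epistemic fact evolves with the conversation.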

If domain knowledge is “what is true in the world”, and epistemic knowledge is “who knows what”, the third piece of the puzzle is “who needs to know what” in order to effectively make a contribution to the shared task at hand. We will use the term “interests” as a name for those facts that describe knowledge that a component needs to learn. Knowledge of the various interests in a group allows collaborators to plan their communication acts according to the needs of individual components and the group as a whole. In conversations among people, interests are expressed as questions; in a computational setting, they are conveyed by requests, queries, or subscriptions.

The interests of components in a concurrent system thus direct the flow of knowledge within the system. The interests of a group may be constant, or may vary with time.

When interest is fixed, remaining the same for a certain class of shared task, the programmer can plan paths for communication up front. For example, in the context of a single TCP connection, the interests of the two parties involved are always the same: each peer wishes to learn what the other has to say. As a consequence, libraries implementing TCP can bake in the assumption that clients will wish to access received data. As another example, a programmer charged with implementing a request counter in a web server may choose to use a simple global integer variable, safe in the knowledge that the only possible item of interest is the current value of the counter.

A changing, dynamic set of interests, however, demands development of a vocabulary for communicating changes in interest during a conversation. For example, the query language of a SQL database is just such a vocabulary. The server's initial interest is in what the client is interested in, and is static, but the client's own interests vary with each request, and must be conveyed anew in the context of each separate interaction. Knowledge about dynamically-varying interests allows a group of collaborating components to change its interaction patterns on the fly.
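As a rough illustration of interests directing the flow of knowledge, the following Python sketch routes facts only to parties that have declared an interest, and lets that interest change during the conversation. The `Exchange` class and its methods are assumptions made for this example; they do not reproduce the dataspace model or any Syndicate API.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple

Fact = Tuple[str, object]  # (topic, payload): a deliberately simple fact shape
Listener = Callable[[Fact], None]


class Exchange:
    """Hypothetical medium that routes facts according to declared interests."""

    def __init__(self) -> None:
        self._interests: DefaultDict[str, List[Listener]] = defaultdict(list)

    def express_interest(self, topic: str, listener: Listener) -> None:
        self._interests[topic].append(listener)

    def withdraw_interest(self, topic: str, listener: Listener) -> None:
        self._interests[topic].remove(listener)

    def publish(self, fact: Fact) -> int:
        """Deliver a fact to currently interested parties; return how many received it."""
        listeners = list(self._interests.get(fact[0], []))
        for listener in listeners:
            listener(fact)
        return len(listeners)


exchange = Exchange()
seen: List[Fact] = []
on_counter = seen.append  # keep one reference so the interest can be withdrawn

exchange.express_interest("counter", on_counter)
delivered_first = exchange.publish(("counter", 1))       # reaches the listener
delivered_other = exchange.publish(("weather", "rain"))  # nobody is interested
exchange.withdraw_interest("counter", on_counter)
delivered_later = exchange.publish(("counter", 2))       # interest has lapsed
```

The publisher never names a recipient. Which components learn a fact is determined entirely by the interests in force at the moment of publication.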

With this ontology in hand, we may answer questions K2 and K3. Each task is delimited by a conversational frame. Within that frame, components share knowledge related to the domain of the task at hand, and knowledge related to the knowledge, beliefs, needs, and interests of the various participants in the collaborative group. Conversations are recursively structured by shared knowledge of (sub-)conversational frames, defined in terms of any or all of the types of knowledge we have discussed. Some conversations take place at different levels within a larger frame, bridging between tasks and their subtasks. Components are frequently engaged in multiple tasks, and thus often participate in multiple conversations at once. The knowledge a component needs to do its job is provided to it when it is created, or later supplied to it in response to its interests.

2.3 Unpredictability at run-time

A full answer to question K4 must wait until the survey of communication and coordination mechanisms of chapter 3. However, this dissertation will show that at least one form of knowledge-sharing, the Syndicate design, encourages robust handling of many kinds of concurrency-related unpredictability.

The epistemological approach we have taken to questions K1–K3 suggests some initial steps toward an answer to question K5. In order for a program to be robust in the face of unpredictable events, it must first be able to detect these events, and second be able to muster an appropriate response to them. Certain kinds of events can be reliably detected and signaled, such as component crashes and exceptions, and arrivals and departures of components in the group. Others cannot easily be detected reliably, such as nontermination, excessive slowness, or certain kinds of deadlock and datalock. Half-measures such as use of timeouts must suffice for the latter sort. Still other kinds of unpredictability such as memory races or message races may be explicitly worked around via careful protocol design, perhaps including information tracking causality or provenance of a piece of knowledge or arranging for extra coordination to serialize certain sensitive operations.

No matter the source of the unpredictability, once detected it must be signaled to interested parties. Our epistemic, knowledge-sharing focus allows us to treat the facts of an unpredictable event as knowledge within the system. Often, such a fact will have an epistemic consequence. For example, learning that a component has crashed will allow us to discount any partial results we may have learned from it, and to discard any records we may have been keeping of the state of the failed component itself. Generally speaking, an epistemological perspective can help each component untangle intact from damaged or potentially untrustworthy pieces of knowledge. Having classified its records into “salvageable” and “unrecoverable”, it may discard items as necessary and engage with the remaining portion of the group in actions to repair the damage and continue toward the ultimate goal.

One particular strategy is to retry a failed action. Consideration of the roles involved in a shared task can help determine the scope of the action to retry. For example, the idea of supervision that features so prominently in Erlang programming (Armstrong 2003) is to restart entire failing components from a specification of their roles. Here, consideration of the epistemic intentions of components can be seen to help the programmer design a system robust to certain forms of unpredictable failure.

2.4 Unpredictability in the design process

Programs are seldom “finished”. Change must be accommodated at every stage of a program's life cycle, from the earliest phases of development to, in many cases, long after a program is deployed. When concurrency is involved, such change often involves emendations to protocol definitions and shifts in the roles and relationships within a group of components. Just as with question K4, a full examination of question K6 must wait for chapter 3. However, approaching the question in the abstract, we may identify a few desirable characteristics of linguistic support for concurrent programming.

First, debugging of concurrent programs can be extremely difficult. A language should have tools for helping programmers gain insight into the intricacies of the interactions among each program's components. Such tools depend on information gleaned from the knowledge-sharing mechanism of the language. As such, a mechanism that generates trace information that matches the mental model of the programmer is desirable.

Second, changes to programs often introduce new interactions among existing components. A knowledge-sharing mechanism should allow for straightforward composition of pieces of program code describing (sub)conversations that a component is to engage in. It should be possible to introduce an existing component to a new conversation without heavy revision of the code implementing the conversations the component already supports.

Finally, service programs must often run for long periods of time without interruption. In cases where new features or important bug-fixes must be introduced, it is desirable to be able to replace or upgrade program components without interrupting service availability. Similar concerns arise even for user-facing graphical applications, where upgrades to program code must preserve various aspects of program state and configuration across the change.

2.5 Syndicate's approach to concurrency

Syndicate places knowledge front and center in its design in the form of assertions. An assertion is a representation of an item of knowledge that one component wishes to communicate to another. Assertions may represent framing knowledge, domain knowledge, and epistemic knowledge, as a component sees fit. Each component in a group exists within a dataspace which both keeps track of the group's current set of assertions and schedules execution of its constituent components. Components add and remove assertions from the dataspace freely, and the dataspace ensures that components are kept informed of relevant assertions according to their declared interests.

In order to perform this task, Syndicate dataspaces place just one constraint on the interpretation of assertions: there must exist, in a dataspace implementation, a distinct piece of syntax for constructing assertions that will mean interest in some other assertion. For example, if “the color of the boat is blue” is an assertion, then so is “there exists some interest in the color of the boat being blue”. A component that asserts interest in a set of other assertions will be kept informed as members of that set appear and disappear in the dataspace through the actions of the component or its peers.

Syndicate makes extensive use of wildcards for generating large (in fact, often infinite) sets of assertions. For example, “interest in the color of the boat being anything at all” is a valid and useful set of assertions, generated from a piece of syntax with a wildcard marker in the position where a specific color would usually reside. Concretely, we might write an assertion of interest with a wildcard in the color position; it generates the set containing one assertion of interest for each value that could fill that position, with the value ranging over the entire universe of assertions.
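To make the idea of a wildcard pattern concrete, the following is a minimal sketch in Python. It is not Syndicate's own syntax or implementation: the tuple encoding of assertions, the `ANY` marker, and the `matches` function are all hypothetical names chosen for illustration. A pattern denotes the (possibly infinite) set of assertions that `matches` accepts.

```python
from typing import Any


class Wildcard:
    """Marker meaning 'any value at all' in a pattern (hypothetical name)."""

    def __repr__(self) -> str:
        return "_"


ANY = Wildcard()


def matches(pattern: Any, value: Any) -> bool:
    """Return True if `value` belongs to the set of assertions denoted by `pattern`."""
    if isinstance(pattern, Wildcard):
        return True
    if isinstance(pattern, tuple) and isinstance(value, tuple):
        # Compound assertions match position by position.
        return len(pattern) == len(value) and all(
            matches(p, v) for p, v in zip(pattern, value)
        )
    return pattern == value


# "The color of the boat being anything at all."
boat_color = ("color", "boat", ANY)

print(matches(boat_color, ("color", "boat", "blue")))  # True
print(matches(boat_color, ("color", "car", "blue")))   # False
```

Because the wildcard accepts compound values as well as atoms, the set denoted by `boat_color` is unbounded, which is why a pattern rather than an enumeration is needed.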

The design of the dataspace model thus far seems similar to the tuplespace model (Gelernter 1985; Gelernter and Carriero 1992; Carriero et al. 1994). There are two vital distinctions. The first is that tuples in the tuplespace model are “generative”, taking on independent existence once placed in the shared space, whereas assertions in the dataspace model are not. Assertions in a dataspace never outlive the component that is currently asserting them; when a component terminates, all its assertions are retracted from the shared space. This occurs whether termination was normal or the result of a crash or an exception. The second key difference is that multiple copies of a particular tuple may exist in a tuplespace, while redundant assertions in a dataspace cannot be distinguished by observers. If two components separately place the same assertion into their common dataspace, a peer that has previously asserted interest in it is informed merely that it has been asserted, not how many times it has been asserted. If one redundant copy of the assertion is subsequently withdrawn, the observer will not be notified; only when every copy is retracted is the observer notified that the assertion is no longer present in the dataspace. Observers are shown only a set view on an underlying bag of assertions. In other words, producing a tuple is non-idempotent, while making an assertion is idempotent.
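The two distinctions can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the dissertation's formal model or either implementation: the class `Dataspace`, its method names, and the single global event log (which stands in for an observer interested in everything) are hypothetical. It keeps a bag of assertions per actor, reports only absent-to-present and present-to-absent transitions, and retracts everything an actor asserted when that actor terminates.

```python
from collections import Counter, defaultdict


class Dataspace:
    """Toy dataspace: a bag of assertions underneath, a set view for observers."""

    def __init__(self):
        self.by_actor = defaultdict(Counter)  # actor name -> bag of its assertions
        self.total = Counter()                # bag of all assertions
        self.log = []                         # what an observer would be told

    def assert_(self, actor, fact):
        self.by_actor[actor][fact] += 1
        self.total[fact] += 1
        if self.total[fact] == 1:             # absent -> present
            self.log.append(("added", fact))

    def retract(self, actor, fact):
        if self.by_actor[actor][fact] == 0:
            return                            # this actor holds no such assertion
        self.by_actor[actor][fact] -= 1
        self.total[fact] -= 1
        if self.total[fact] == 0:             # present -> absent
            self.log.append(("removed", fact))

    def terminate(self, actor):
        """Normal exit or crash: every assertion of the actor is retracted."""
        for fact, count in list(self.by_actor[actor].items()):
            for _ in range(count):
                self.retract(actor, fact)
        del self.by_actor[actor]


ds = Dataspace()
fact = ("color", "boat", "blue")
ds.assert_("alice", fact)    # observer learns the fact
ds.assert_("bob", fact)      # redundant: no event
ds.retract("alice", fact)    # one copy remains: no event
ds.terminate("bob")          # last copy vanishes with its asserter
print(ds.log)
# [('added', ('color', 'boat', 'blue')), ('removed', ('color', 'boat', 'blue'))]
```

In a generative tuplespace, by contrast, the tuple placed by `bob` would remain after `bob` terminated, and a reader could observe both copies.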

Even more closely related is the fact space model (Mostinckx et al. 2007; Mostinckx, Lombide Carreton and De Meuter 2008), an approach to middleware for connecting programs in mobile networks. The model is based on an underlying tuplespace, interpreting tuples as logical facts by working around the generativity and poor fault-tolerance properties of the tuplespace mechanism in two ways. First, tuples are recorded alongside the identity of the program that produced them. This provenance information allows tuples to be removed when their producer crashes or is otherwise disconnected from the network. Second, tuples can be interpreted in an idempotent way by programs. This allows programs to ignore redundant tuples, recovering a set view from the bag of tuples they observe. While the motivations and foundations of the two works differ, in many ways the dataspace and fact space models address similar concerns. Conceptually, the dataspace model can be viewed as an adaptation and integration of the fact space model into a programming language setting. The fact space model focuses on scaling up to distributed systems, while our focus is instead on a mechanism that scales down to concurrency in the small. In addition, the dataspace model separates itself from the fact space model in its explicit, central epistemic constructions and its emphasis on conversational frames.

The dataspace model maintains a strict isolation between components in a dataspace, forcing all interactions between peers through the shared dataspace. Components access and update the dataspace solely via message passing. Shared memory in the sense of multi-threaded models is ruled out. In this way, the dataspace model seems similar to the actor model (Hewitt, Bishop and Steiger 1973; Agha 1986; Agha et al. 1997; De Koster et al. 2016). The core distinction between the models is that components in the dataspace model communicate indirectly by making and retracting assertions in the shared store which are observed by other components, while actors in the actor model communicate directly by exchange of messages which are addressed to other actors. Assertions in a dataspace are routed according to the intersection between sets of assertions and sets of asserted interests in assertions, while messages in the actor model are each routed to an explicitly-named target actor.

The similarities between the dataspace model and the actor, tuplespace, and fact space models are strong enough that we borrow terminology from them to describe concepts in Syndicate. Specifically, we borrow the term “actor” to denote a Syndicate component. What the actor model calls a “configuration” we fold into our idea of a “dataspace”, a term which also denotes the shared knowledge store common to a group of actors. The term “dataspace” itself was chosen to highlight this latter denotation, making a connection to fact spaces and tuplespaces.

We will touch again on the similarities and differences among these models in chapter 3, examining details in chapter 11. In the remainder of this subsection, let us consider Syndicate's relationship to questions K1–K6.

Cooperation, knowledge & conversation.

The Syndicate design takes questions K1–K3 to heart, placing them at the core of its choice of sharing mechanism and the concomitant approach to protocol design. Actors exchange knowledge encoded as assertions via a shared dataspace. All shared state in a Syndicate program is represented as assertions: this includes domain knowledge, epistemic knowledge, and frame knowledge. Key to Syndicate's functioning is the use of a special form of epistemic knowledge, namely assertions of interest. It is these assertions that drive knowledge flow in a program from parties asserting some fact to parties asserting interest in that fact.

Mey (2001) defines pragmatics as the subfield of linguistics which “studies the use of language in human communication as determined by the conditions of society”. Broadening its scope to include computer languages in software communication as determined by the conditions of the system as a whole takes us into a somewhat speculative area.

“Pragmatics is sometimes characterized as dealing with the effects of context [...] if one collectively refers to all the facts that can vary from utterance to utterance as ‘context.’” (Korta and Perry 2015)

Viewing an interaction among actors as a conversation and shared assertions as conversational state allows programmers to employ the linguistic tools discussed in section 2.1, taking steps toward a pragmatics of computer protocols. Syndicate encourages programmers to design conversational protocols directly in terms of roles and to map conversational contributions onto the assertion and retraction of assertions in the shared space. Grice's maxims offer high-level guidance for defining the meaning of each assertion: the maxims of quantity guide the design of the individual records included in each assertion; those of quality and relevance help determine the criteria for when an assertion should be made and when it should be retracted; and those of manner shape a vocabulary of primitive assertions with precisely-defined meanings that compose when simultaneously expressed to yield complex derived meanings.

Syndicate's assertions of interest determine the movement of knowledge in a system. They define, in effect, the set of facts an actor is “listening” for. All communication mechanisms must have some equivalent feature, used to route information from place to place. Unusually, however, Syndicate allows actors to react to these assertions of interest, in that assertions of interest are ordinary assertions like any other. Actors may act based on their knowledge of the way knowledge moves in a system by expressing interest in interest and deducing implicatures from the discovered facts.

Mey (2001) defines a conversational implicature as “something which is implied in conversation, that is, something which is left implicit in actual language use.” Grice (1975) makes three statements helpful in pinning down the idea of conversational implicature:

1. “To assume the presence of a conversational implicature, we have to assume that at least the Cooperative Principle is being observed.”

2. “Conversational implicata are not part of the meaning of the expressions to the employment of which they attach.” This is what distinguishes implicature from implication.

3. “To calculate a conversational implicature is to calculate what has to be supposed in order to preserve the supposition that the Cooperative Principle is being observed.”

For example, imagine an actor responsible for answering questions about factorials. In its protocol, an assertion of a factorial fact means that the factorial of a particular number is a particular value. If the actor learns that some peer has asserted interest in the set of facts describing all potential answers to the question “what is the factorial of this number?,” it can act on this knowledge to compute a suitable answer and can then assert the corresponding fact in response. Once it learns that interest in the factorial of that number is no longer present in the group, it can retract its own assertion and release the corresponding storage resources. Knowledge of interest in a topic acts as a signal of demand for some resource: here, computation (directly) and storage (indirectly). The raw fact of the interest itself has the direct semantic meaning “please convey to me any assertions matching this pattern”, but has an indirect, unspoken, pragmatic meaning, an implicature, in our imagined protocol of “please compute the answer to this question.”
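The factorial protocol can be sketched as a pair of event handlers. The sketch below is illustrative only: the tuple shapes `("fact", n, value)` and `("observe", pattern)`, the `ANY` marker, the class `FactorialActor`, and the argument 5 are assumptions made for the example rather than the notation of the original text. Each handler returns the action the actor would perform on the dataspace.

```python
import math

ANY = "*"  # wildcard marker; this encoding is an assumption


class FactorialActor:
    """Reacts to the appearance and disappearance of interest in factorials."""

    def __init__(self):
        self.published = {}  # n -> fact currently asserted on behalf of that demand

    def on_interest_added(self, interest):
        tag, (label, n, _answer) = interest
        if tag != "observe" or label != "fact":
            raise ValueError("not an interest in a factorial fact")
        # Implicature: interest in the answer is read as a request to compute it.
        fact = ("fact", n, math.factorial(n))
        self.published[n] = fact
        return ("assert", fact)

    def on_interest_removed(self, interest):
        _tag, (_label, n, _answer) = interest
        # No demand remains, so withdraw the answer and release its storage.
        return ("retract", self.published.pop(n))


actor = FactorialActor()
question = ("observe", ("fact", 5, ANY))
print(actor.on_interest_added(question))    # ('assert', ('fact', 5, 120))
print(actor.on_interest_removed(question))  # ('retract', ('fact', 5, 120))
```

The handler for removal does not need to know why interest vanished; a deliberate withdrawal and a crash of the asking peer look the same from here.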

The idea of implicature finds use beyond assertions of interest. For example, the process of deducing an implicature may be used to reconstruct temporarily- or permanently-unavailable information “from context,” based on the underlying assumption that the parties involved are following the Cooperative Principle. For example, a message describing successful fulfillment of an order carries an implicature of the existence of the order. A hearer of the message may infer the order's existence on this basis. Similarly, a reply implicates the existence of a request.

Finally, the mechanism that Syndicate provides for conveying assertions from actor to actor via the dataspace allows reasoning about common knowledge (Fagin et al. 2004). An actor placing some assertion into the dataspace knows both that all interested peers will automatically learn of the assertion and that each such peer knows that all others will learn of the assertion. Providing this guarantee at the language level encourages the use of epistemic reasoning in protocol design while avoiding the risks of implementing the necessary state-management substrate by hand.

Run-time unpredictability.

Recall from section 2.3 that robust treatment of unpredictability requires that we be able either to detect and respond to, or to forestall, the various unpredictable situations inherent to concurrent programming. The dataspace model is the foundation of Syndicate's approach to questions K4 and K5, offering a means for signaling and detection of such events. However, by itself the dataspace model is not enough. The picture is completed with linguistic features for structuring state and control flow within each individual actor. These features allow programmers to concisely express appropriate responses to unexpected events. Finally, Syndicate's knowledge-based approach suggests techniques for protocol design which can help avoid certain forms of unpredictability by construction.

The dataspace model constrains the means by which Syndicate programs may communicate events within a group, including communication of unpredictable events. All communication must be expressed as changes in the set of assertions in the dataspace. Therefore, an obvious approach is to use assertions to express such ideas as demand for some service, membership of some group, presence in some context, availability of some resource, and so on. Actors expressing interest in such assertions will receive notifications as matching assertions come and go, including when they vanish unexpectedly. Combining this approach with the guarantee that the dataspace removes all assertions of a failing actor from the dataspace yields a form of exception propagation.

For example, consider a protocol where actors assert a record carrying a message for the user in order to cause a user interface element to appear on the user's display. The actor responsible for reacting to such assertions, creating and destroying graphical user interface elements, will react to retraction of such an assertion by removing the associated graphical element. The actor that made the assertion may deliberately retract it when it is no longer relevant for the user. However, it may also crash. If it does, the dataspace model ensures that its assertions are all retracted. Since this includes the message assertion, the actor managing the display learns automatically that its services are no longer required.

Another example may be seen in the factorial example discussed above. The client asserting interest in a factorial may “lose interest” before it receives an answer, or of course may crash unexpectedly. From the perspective of the factorial actor, the two situations are identical: it is informed of the retraction, concludes that no interest in that factorial remains, and may then choose to abandon the computation. The request implicated by the assertion of interest is effectively canceled by retraction, whether this is caused by some active decision on the part of the requestor or is an automatic consequence of its unexpected failure.

The dataspace model thus offers a mechanism for using changes in assertions to express changes in demand for some resource, including both expected and unpredictable changes. Building on this mechanism, Syndicate offers linguistic tools for responding appropriately to such changes. Assertions describing a demand or a request act as framing knowledge and thus delimit a conversation about the specific demand or request concerned. For example, the presence of each particular user-message assertion corresponds to one particular “topic of conversation”. Likewise, each assertion of interest in a factorial corresponds to a particular “call frame” invoking the services of the factorial actor. Actors need tools for describing such conversational frames, associating local conversational state, relevant event handlers, and any conversation-specific assertions that need to be made with each conversational frame created.

Syndicate introduces a language construct called a facet for this purpose. Each actor is composed of multiple facets; each facet represents a particular conversation that the actor is engaged in. A facet both scopes and specifies conversational responses to incoming events. Each facet includes private state variables related to the conversation concerned, as well as a bundle of assertions and event handlers. Each event handler has a pattern over assertions associated with it. Each of these patterns is translated into an assertion of interest and combined with the other assertions of the facet to form the overall contribution that the facet makes to the shared dataspace. An analogy to objects in object-oriented languages can be drawn. Like an object, a facet has private state. Its event handlers are akin to an object's methods. Unique to facets, though, is their contribution to the shared state in the dataspace: objects lack a means to automatically convey changes in their local state to interested peers.

Facets may be nested, so that nested sub-conversations are reflected by nested facets. When a containing facet is terminated, its contained facets are also terminated, and when an actor has no facets left, the actor itself terminates. Of course, if the actor crashes or is explicitly shut down, all its facets are removed along with it. These termination-related aspects correspond to the idea that a thread of conversation that logically depends on some overarching discussion context clearly becomes irrelevant when the broader discussion is abandoned.
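The cascading termination of nested facets can be illustrated with a small tree structure. This is a sketch under assumptions, not Syndicate's facet implementation: the class `Facet`, its fields, and the choice to stop inner facets before their container are all hypothetical. Event handlers and actor-level termination are omitted.

```python
class Facet:
    """One conversation: its assertions plus any nested sub-conversations."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.children = []
        self.assertions = set()
        self.alive = True
        if parent is not None:
            parent.children.append(self)

    def terminate(self, stopped=None):
        """Terminate this facet and every facet nested inside it.

        Returns the names of the facets stopped, innermost first.
        """
        stopped = [] if stopped is None else stopped
        if not self.alive:
            return stopped
        for child in list(self.children):   # copy: children detach themselves
            child.terminate(stopped)
        self.alive = False
        self.assertions.clear()             # the facet's contribution is withdrawn
        if self.parent is not None:
            self.parent.children.remove(self)
        stopped.append(self.name)
        return stopped


session = Facet("session")
upload = Facet("upload", parent=session)
chunk = Facet("chunk", parent=upload)
print(session.terminate())   # ['chunk', 'upload', 'session']
```

Abandoning the outer `session` conversation makes the `upload` and `chunk` sub-conversations irrelevant, so they end with it.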

The combination of Syndicate's facets and its assertion-centric approach to state replication yields a mechanism for robustly detecting and responding to certain kinds of unpredictable event. However, not all forms of unpredictability lend themselves to explicit modeling as shared assertions. For these, we require an alternative approach.

Consider unpredictable interleavings of events: for example, UDP datagrams may be reordered arbitrarily by the network. If some dependent datagram can only be interpreted after a context-setting datagram has been interpreted, a datagram receiver must arrange to buffer packets when they are received out of order, reconstructing an appropriate order to perform its task. The same applies to messages passed between actors in the actor model. The observation that the context-setting datagram establishes necessary context for the subsequent message suggests an approach we may take in Syndicate. If instead of messages we model the two items as assertions, then we may write our program as follows:

- Express interest in the context-setting assertion. Wait until notified that it has been asserted.

- Express interest in the dependent assertion. Wait until notified that it has been asserted.

- Process both as usual.

- Withdraw the previously-asserted interests in both.

This program will function correctly no matter whether the context-setting assertion is made before the dependent one or vice versa. The structure of the program reflects the observation that the former supplies a frame within which the latter is to be understood, by paying attention to the latter only after having learned the former. Use of assertions instead of messages allows an interpreter of knowledge to decouple itself from the precise order of events in which knowledge is acquired and shared, concentrating instead on the logical dependency ordering among items of knowledge.
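The steps above can be sketched as follows. The sketch is a simplification under assumptions: the keys `"context"` and `"dependent"`, the functions `receiver` and `run`, and the dictionary standing in for the dataspace are hypothetical, and the final withdrawal of interest is omitted. The point it illustrates is that assertions persist once made, so the receiver reaches the same result whichever item appears first.

```python
def receiver(known, handled):
    """Re-evaluate the receiver's progress against the current assertions."""
    if "context" not in known:        # step 1: still waiting for the frame
        return
    if "dependent" not in known:      # step 2: only now look for the second item
        return
    if not handled:                   # step 3: process both, exactly once
        handled.append((known["context"], known["dependent"]))


def run(arrival_order):
    """Assert items in the given order, notifying the receiver after each."""
    known, handled = {}, []
    for key, value in arrival_order:
        known[key] = value            # an assertion appears in the shared store
        receiver(known, handled)      # the receiver is notified of the change
    return handled


in_order = run([("context", "header"), ("dependent", "body")])
reordered = run([("dependent", "body"), ("context", "header")])
print(in_order == reordered == [("header", "body")])   # True
```

With messages, the reordered run would require an explicit buffer for the early item; here the shared store plays that role.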

Finally, certain forms of unpredictability cannot be effectively detected or forestalled. For example, no system can distinguish nontermination from mere slowness in practice. In cases such as these, timeouts can be used in Syndicate just as in other languages. Modeling time as a protocol involving assertions in the dataspace allows us to smoothly incorporate time with other protocols, treating it just like any other kind of knowledge about the world.

Unpredictability in the design process.

Section 2.4, expanding on question K6, introduced the challenges of debuggability, flexibility, and upgradeability. The dataspace model contributes to debuggability, while facets and hierarchical layering of dataspaces contribute to flexibility. While this dissertation does not offer more than a cursory investigation of upgradeability, the limited exploration of the topic so far completed does suggest that it could be smoothly integrated with the Syndicate design.

The dataspace model leads the programmer to reason about the group of collaborating actors as a whole in terms of two kinds of change: actions that alter the set of assertions in the dataspace, and events delivered to individual actors as a consequence of such actions. This suggests a natural tracing mechanism. There is nothing to the model other than events and actions, so capturing and displaying the sequence of actions and events not only accurately reflects the operation of a dataspace program, but directly connects to the programmer's mental model as well.

Facets can be seen as atomic units of interaction. They allow decomposition of an actor's relationships and conversations into small, self-contained pieces with well-defined boundaries. As the overall goals of the system change, its actors can be evolved to match by making alterations to groups of related facets in related actors. Altering, adding, or removing one facet while leaving others in an actor alone makes perfect sense.

The dataspace model is hierarchical. Each dataspace is modeled as a component in some wider context: as an actor in another, outer dataspace. This applies recursively. Certain assertions in the dataspace may be marked with a special constructor that causes them to be relayed to the next containing dataspace in the hierarchy, yielding cross-dataspace interaction. Peers in a particular dataspace are given no means of detecting whether their collaborators are simple actors or entire nested dataspaces with rich internal structure. This frees the program designer to decompose an actor into a nested dataspace with multiple contained actors, without affecting other actors in the system at large. This recursive, hierarchical (dis)aggregation of actors also contributes to the flexibility of a Syndicate program as time goes by and requirements change.

Code upgrade is a challenging problem for any system. Replacing a unit of code involves the old code marshaling its state into a bundle of information to be delivered to the new code. In other words, the actor involved sends a message to its “future self”. Systems like Erlang (Armstrong 2003) incorporate sophisticated language- and library-level mechanisms for supporting such code replacement. Syndicate shares with Erlang some common ideas from the actor model. The strong isolation between actors allows each to be treated separately when it comes to code replacement. Logically, each is running an independent codebase. By casting all interactions among actors in terms of a protocol, both Erlang and Syndicate offer the possibility of protocol-mediated upgrades and reboots affecting anything from a small part to the entirety of a running system.

2.6 Syndicate design principles

In upcoming chapters, we will see concrete details of the Syndicate design and its implementation and use. Before we leave the high-level perspective on concurrency, however, a few words on general principles of the design of concurrent and distributed systems are in order. I have taken these guidelines as principles to be encouraged in Syndicate and in Syndicate programs. To be clear, they are my own conjectures about what makes good software. I developed them both through my experience with early Syndicate prototypes and through my experience developing large-scale commercial software before beginning this project. In some cases, the guidelines influenced the Syndicate design, having an indirect but universal effect on Syndicate programs. In others, they form a set of background assumptions intended to directly shape the protocols designed by Syndicate programmers.

Exclude implementation concepts from domain ontologies.

When working with a Syndicate implementation, programmers must design conversational protocols that capture relevant aspects of the domain each program is intended to address. The most important overarching principle is that Syndicate programs and protocols should make their domain manifest, and hide implementation constructs. Generally, each domain will include an ontology of its own, relating to concepts largely internal to the domain. Such an ontology will seldom or never include concepts from the host language or even Syndicate-specific ideas.

Following this principle, Syndicate takes care to avoid polluting a programmer's domain models with implementation- and programming-language-level concepts. As far as possible, the structure and meaning of each assertion is left to the programmer. Syndicate implementations reserve the contents of a dataspace for domain-level concepts. Access to information in the domain of programs, relevant to debugging, tracing and otherwise reflecting on the operation of a running program, is offered by other (non-dataspace, non-assertion) means. This separation of domain from implementation mechanism manifests in several specific corollaries:

- Do not propagate host-language exception values across a dataspace.

An actor that raises an uncaught exception is terminated and removed from the dataspace, but the details of the exception (stack traces, error messages, error codes etc.) are not made available to peers via the dataspace. After all, exceptions describe some aspect of a running computer program, and do not in general relate to the program's domain.

Instead, a special reflective mechanism is made available for host-language programs to access such information for debugging and other similar purposes. Actors in a dataspace do not use this mechanism when operating normally. As a rule, they instead depend on domain-level signaling of failures in terms of the (automatic) removal of domain-level assertions on failure, and do not depend on host-language exceptions to signal domain-level exceptional situations.

17. Syndicate distinguishes itself from Erlang here. Erlang's failure-signaling primitives, links and monitors, necessarily operate in terms of actor IDs, so it is no great step to include stack traces and error messages alongside an actor ID in a failure description record.

- Make internal actor identifiers completely invisible.

The notion of a (programming-language) actor is almost never part of the application domain; this goes double for the notion of an actor's internal identifier (a.k.a. pointer, “pid”, or similar). Where identity of specific parties is relevant to a domain, Syndicate requires the protocol to explicitly specify and manage such identities, and they remain distinct from the internal identities of actors in a running Syndicate program. Again, during debugging, the identities of specific actors are relevant to the programmer, but this is because the programmer is operating in a different domain from that of the program under study.

Explicit treatment of identity unlocks two desirable abilities:

- One (implementation-level) actor can transparently perform multiple (domain-level) roles. Having decoupled implementation-level identity from domain-level information, we are free to choose arbitrary relations connecting them.

- One actor can transparently delegate portions of its responsibilities to others. Explicit management of identity allows actors to share a domain-level identity without needing to share an implementation-level identity. Peers interacting with such actors remain unaware of the particulars of any delegation being employed.

- Multicast communication should be the norm; point-to-point, a special case.

Conversational interactions can involve any number of participants. In languages where the implementation-provided medium of conversation always involves exactly two participants, programmers have to encode multi-party domain-level conversations using the two-party mechanism. Because of this, messages between components have to mention implementation-level conversation endpoints such as channel or actor IDs, polluting otherwise domain-specific ontologies with implementation-level constructs. In order to keep implementation ideas out of domain ontologies, Syndicate does not define any kind of value-level representation of a conversation. Instead, it leaves the choice of scheme for naming conversations up to the programmer.

- Equivalences on messages, assertions and other forms of shared state should be in terms of the domain, not in terms of implementation constructs.

For example, consider deduplication of received messages. In some protocols, in order to make message receipt idempotent, a table of previously-seen messages must be maintained. To decide membership of this table, a particular equivalence must be chosen. Forcing this equivalence to involve implementation-level constructs entails a need for the programmer to explicitly normalize messages to ensure that the implementation-level equivalence reflects the desired domain-level equivalence. To be even more specific:

- If a transport includes message sequence numbers, message identifiers, timestamps etc., then these items of information from the transport should not form part of the equivalence used.

- Sender identity should not form part of the equivalence used. If a particular protocol needs to know the identity of the sender of a message, it should explicitly include a definition of the relevant notion of identity (not necessarily the implementation-level identity of the sender) and explicitly include it in message type definitions.
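The deduplication principle can be illustrated with a minimal Python sketch. The `Envelope` type, its field names, and the choice of `(order_id, action)` as the domain-level key are assumptions made purely for illustration; they come neither from Syndicate nor from any particular protocol. The point is only that the table of previously-seen messages is keyed on a projection that omits transport metadata and sender identity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    # Transport-level metadata (hypothetical fields); excluded from equivalence.
    seq: int
    sender_id: str
    timestamp: float
    # Domain-level content (hypothetical fields); defines equivalence.
    order_id: str
    action: str


def domain_key(msg: Envelope) -> tuple:
    """Project a message onto the fields that define domain-level equivalence."""
    return (msg.order_id, msg.action)


class Deduplicator:
    """Table of previously-seen messages, keyed on the domain-level projection."""

    def __init__(self):
        self._seen = set()

    def accept(self, msg: Envelope) -> bool:
        """Return True the first time a domain-equivalent message arrives."""
        key = domain_key(msg)
        if key in self._seen:
            return False
        self._seen.add(key)
        return True
```

Under these assumptions, two messages that differ only in sequence number, timestamp, or sender are treated as the same message, so no separate normalization step is needed before the membership test.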

Support resource management decisions.

Concurrent programs in all their forms rely on being able to scope the size and lifetime of allocations of internal resources made in response to external demand. “Demand” and “resource” are extremely general ideas. As a result, resource management decisions appear in many different guises, and give rise to a number of related principles:

- Demand-matching should be well-supported.

Demand-matching is the process of automatic allocation and release of some resource in response to detected need elsewhere in a program. The concept applies in many different places.

For example, in response to the demand of an incoming TCP connection, a server may allocate resources including a pair of memory buffers and a new thread. The buffers, combined with TCP back-pressure, give control over memory usage, and the thread gives control over compute resources as well as offering a convenient language construct to attach other kinds of resource-allocation and -release decisions to. When the connection closes, the server may terminate the thread, release other associated resources, and finalize its state.

Another example can be found in graphical user interfaces, where various widgets manifest in response to the needs of the program. An entry in a “buddy list” in a chat program may be added in response to presence of a contact, making the “demand” the presence of the contact and the “resource” the resulting list entry widget. When the contact disconnects, the “demand” for the “resource” vanishes, and the list entry widget should be removed.

- Service presence (Konieczny et al. 2009) and presence information generally should be well-supported.

Consider linking multiple independent services together to form a concurrent application. A web-server may depend on a database: it “demands” the services of the database, which acts as a “resource”. The web-server and database may in turn depend upon a logging service. No service can start its work before its dependencies are ready: each observes the presence of its dependencies as part of its initialization.

Similarly, in a publish-subscribe system, it may be expensive to collect and broadcast a certain statistic. A publisher may use the availability of subscriber information to decide whether or not the statistic needs to be maintained. Consumers of the statistic act as “demand”, and the resource is the entirety of the activity of producing the statistic, along with the statistic itself. Presence of consumers is used to manage resource commitment.

Finally, the AMQP messaging middleware protocol (The AMQP Working Group 2008) includes special flags named “immediate” and “mandatory” on each published message. They cause a special “return to sender” feature to be activated, triggering a notification to the sender only when no receiver is present for the message at the time of its publication. This form of presence allows a sender to take alternative action in case no peer is available to attend to its urgent message.
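The demand-matching and presence principles above share one shape: a resource exists exactly while some demand for it is present. The following Python sketch shows that shape under stated assumptions. `DemandMatcher`, its method names, and the `allocate` and `release` callbacks are hypothetical names chosen for illustration, not part of Syndicate.

```python
class DemandMatcher:
    """Keep exactly one resource alive for each demand currently present."""

    def __init__(self, allocate, release):
        self._allocate = allocate    # called as allocate(key) -> resource
        self._release = release      # called as release(key, resource)
        self._resources = {}         # demand key -> allocated resource

    def demand_appeared(self, key):
        # Repeated announcements of the same demand allocate only once.
        if key not in self._resources:
            self._resources[key] = self._allocate(key)

    def demand_vanished(self, key):
        # Withdrawing an unknown demand is a harmless no-op.
        if key in self._resources:
            self._release(key, self._resources.pop(key))

    def active(self):
        """Return the set of demands that currently hold a resource."""
        return set(self._resources)


# Usage: the "buddy list" example, with strings standing in for widgets.
removed = []
buddies = DemandMatcher(
    allocate=lambda contact: "widget:" + contact,
    release=lambda contact, widget: removed.append(widget),
)
buddies.demand_appeared("alice")
buddies.demand_appeared("bob")
buddies.demand_appeared("alice")   # duplicate presence, no second widget
buddies.demand_vanished("alice")   # contact disconnects, widget is removed
```

The same structure would fit the publish-subscribe case if the key stood for a subscriber and the allocated resource for the activity of producing the statistic.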

Support direct communication of public aspects of component state.

This is a generalization of the notion of presence, which is just one portion of overall state.

Avoid dependence on timeouts.

In a distributed system, a failed component is indistinguishable from a slow one and from a network failure. Timeouts are a pragmatic solution to the problem in a distributed setting. Here, however, we have the luxury of a non-distributed design, and we may make use of specific forms of “demand” information or presence in order to communicate failure. Timeouts are still required for inter-operation with external systems, but are seldom needed as a normal part of greenfield Syndicate protocol design.
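As a rough illustration of communicating failure through presence rather than timeouts, consider the toy registry below. It is a sketch under assumptions: `ToyDataspace` and its methods are invented for this example and are not the Syndicate API. Each actor's assertions are withdrawn when the actor terminates, whether cleanly or by crashing, and observers are told when the last asserter of a value disappears.

```python
class ToyDataspace:
    """Toy registry of per-actor assertions; an assumption, not the Syndicate API."""

    def __init__(self):
        self._by_actor = {}     # actor name -> set of asserted values
        self._observers = []    # callbacks taking (event, value)

    def observe(self, callback):
        self._observers.append(callback)

    def _asserters(self, value):
        # Number of live actors currently asserting `value`.
        return sum(1 for values in self._by_actor.values() if value in values)

    def _notify(self, event, value):
        for callback in self._observers:
            callback(event, value)

    def assert_value(self, actor, value):
        values = self._by_actor.setdefault(actor, set())
        if value in values:
            return                      # re-asserting is idempotent
        values.add(value)
        if self._asserters(value) == 1:
            self._notify("added", value)

    def actor_terminated(self, actor):
        # Crash and clean exit look the same: all of the actor's
        # assertions are withdrawn, with no timeout involved.
        for value in self._by_actor.pop(actor, set()):
            if self._asserters(value) == 0:
                self._notify("removed", value)
```

A dependent component that observes `"removed"` for a value it relies on learns of the failure directly, instead of inferring it from silence.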

Reduce dependence on order-of-operations.

The language should be designed to make programs robust by default to reordering of signals. As part of this, idempotent signals should be the default where possible.

- Event-handlers should be written as if they were to be run in a (pseudo-) random order, even if a particular implementation does not rearrange them randomly. This is similar to the thinking behind the random event selection in CML's choice mechanism (Reppy 1992, page 131).

- Questions of deduplication, equivalence, and identity must be placed at the heart of each Syndicate protocol design, even if only at an abstract level.
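One way to make the order-independence principle concrete is to check a handler against every delivery order of a batch of signals. The Python sketch below is illustrative only; `handle_all` and `robust_to_reordering` are hypothetical helpers, and the signals are plain strings. The handler's update is idempotent and commutative, so duplicates and reordering leave the resulting state unchanged.

```python
from itertools import permutations


def handle_all(signals):
    """Fold signals into state using an idempotent, commutative update."""
    present = set()
    for fact in signals:
        present.add(fact)   # adding twice, or in another order, changes nothing
    return present


def robust_to_reordering(signals):
    """Check that every delivery order of `signals` yields the same state."""
    outcomes = {frozenset(handle_all(order)) for order in permutations(signals)}
    return len(outcomes) == 1
```

A handler whose update is not commutative, such as one that appends each signal to a list, would fail the same check whenever two distinct signals are present.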

Eschew transfer of higher-order data.

Mathematical and computational structures enjoy an enormous amount of freedom not available to structures that must be realized in the physical world. Similarly, patterns of interaction that can be realized in a non-distributed setting are often inappropriate, unworkable, or impossible to translate to a distributed setting. One example of this concerns higher-order data, by which I mean certain kinds of closure, mutable data structures, and any other stateful kind of entity.

Syndicate is not a distributed programming language, but was heavily inspired by my experience of distributed programming and by limitations of existing programming languages employed in a distributed setting. Furthermore, certain features of the design suggest that it may lead to a useful distributed programming model in future. With this in mind, certain principles relate to a form of physical realizability; chief among them, the idea of limiting information exchange to first-order data wherever possible. The language should encourage programmers to act as if transfer of higher-order data between peers in a dataspace were impossible. While non-distributed implementations of Syndicate can offer support for transfer of functions, objects containing mutable references, and so on, stepping to a distributed setting limits programs to exchange of first-order data only, since real physical communication networks are necessarily first-order. Transfer of higher-order data involves a hidden use/mention distinction. Higher-order data may be encoded, but cannot directly be transmitted.

With that said, however, notions of stateful location or place are important to certain domains, and the ontologies of such domains may well naturally include references to such domain-relevant location information. It is host-language higher-order data that Syndicate discourages, not domain-level references to location and located state.
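The remark that higher-order data may be encoded but not directly transmitted can be sketched as follows. This is a minimal example under assumptions: the `OPERATIONS` table, the operation names, and the JSON wire format are all hypothetical. The sender transmits a first-order description (a mention) of a function, and the receiver rebuilds a callable from a table of behaviours it already knows.

```python
import json

# Hypothetical table of behaviours known to both sender and receiver.
OPERATIONS = {
    "add": lambda amount, x: x + amount,
    "scale": lambda factor, x: x * factor,
}


def encode(op, arg):
    """Describe a function as plain data: a mention of it, not the function itself."""
    if op not in OPERATIONS:
        raise KeyError(op)
    return json.dumps({"op": op, "arg": arg})


def decode(wire):
    """Rebuild a callable on the receiving side from its first-order description."""
    desc = json.loads(wire)
    fn = OPERATIONS[desc["op"]]
    arg = desc["arg"]
    return lambda x: fn(arg, x)
```

Only the string produced by `encode` crosses between peers; the closure returned by `decode` is constructed locally and never transmitted.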

Arrange actors hierarchically.

Many experiments in structuring groups of (actor model) actors have been performed over the past few decades. Some employ hierarchies of actors: the overall system is structured as a tree, with each actor or group existing in exactly one group (e.g. Varela and Agha 1999). Others allow actors to be placed in more than one group at once, yielding a graph of actors (e.g. Callsen and Agha 1994).

Syndicate limits actor composition to tree-shaped hierarchies of actors, again inspired by physical realizability. Graph-like connectivity is encoded in terms of protocols layered atop the hierarchical medium provided. Recursive groupings of computational entities in real systems tend to be hierarchical: threads within processes within containers managed by a kernel running under a hypervisor on a core within a CPU within a machine in a datacenter.

2.7 On the name “Syndicate”

Now that we have seen an outline of the Syndicate design, the following definitions may shed light on the choice of the name “Syndicate”:

A syndicate is a self-organizing group of individuals, companies, corporations or entities formed to transact some specific business, to pursue or promote a shared interest.

— Wikipedia

Syndicate, n.

1. A group of individuals or organizations combined to promote a common interest.

1.1 An association or agency supplying material simultaneously to a number of newspapers or periodicals.

Syndicate, v.tr.

...

1.1 Publish or broadcast (material) simultaneously in a number of newspapers, television stations, etc.

— Oxford Dictionary

An additional relevant observation is that a syndicate can be a group of companies, and a company can be a group of actors.

3 Approaches to Coordination

Our analysis of communication and coordination so far has yielded a high-level, abstract view on concurrency, taking knowledge-sharing as the linchpin of cooperation among components. The previous chapter raised several questions, answering some in general terms, and leaving others for investigation in the context of specific mechanisms for sharing knowledge. In this chapter, we explore these remaining questions. To do so, we survey the paradigmatic approaches to communication and coordination. Our focus is on the needs of programmers and the operational issues that arise in concurrent programming. That is, we look at ways in which an approach helps or hinders achievement of a program's goals in a way that is robust to unpredictability and change.

3.1 A concurrency design landscape

The outstanding questions from chapter 2 define a multi-dimensional landscape within which we place different approaches to concurrency. A given concurrency model can be assigned to a point in this landscape based on its properties as seen through the lens of these questions. Each point represents a particular set of trade-offs with respect to the needs of programmers.

To recap, the questions left for later discussion were:

- K4 Which forms of knowledge-sharing are robust in the face of the unpredictability intrinsic to concurrency?

- K6 Which forms of knowledge-sharing are robust to and help mitigate the impact of changes in the goals of a program?

In addition, the investigation of question K3 (“what do concurrent components need to know to do their jobs?”) concluded with a picture of domain knowledge, epistemic knowledge, framing knowledge, and knowledge flow within a group of components. However, it left unaddressed the question of mechanism, giving rise to a follow-up question:

In short, the three questions relate to robustness, operability and mechanism, respectively. The rest of the chapter is structured around an informal investigation of characteristics refining these categories.

- Mechanism (K3bis).

- A central characteristic of a given concurrency model is its mechanism for exchange of knowledge among program components. Each mechanism yields a different set of possibilities for how concurrent conversations evolve. First, a conversation may have arbitrarily many participants, and a participant may engage in multiple conversations at once. Hence, models and language designs must be examined as to Second, conversations come with associated state. Each participating component must find out about changes to this state and must integrate those changes with its local view. The component may also wish to change conversational state; such changes must be signaled to relevant peers. A mechanism can thus be analyzed in terms of

- Robustness (K4).

- Each concurrency model offers a different level of support to the programmer for addressing the unpredictability intrinsic to concurrent programming. Programs rely on the integrity of each participant's view of overall conversational state; this may entail consideration of consistency among different views of the shared state in the presence of unpredictable latency in change propagation. These lead to investigation of

- C5 how a model helps maintain integrity of conversational state and

- C6 how it helps ensure consistency of state as a program executes.

- Operability (K6).

- The notion of operability is broad, including attributes pertaining to the ease of working with the model at design, development, debugging and deployment time. We will focus on the ability of a model to support

Characteristics C1–C12 in figure 3 will act as a lens through which we will examine three broad families of concurrency: shared memory models, message-passing models, and tuplespaces and external databases. In addition, we will analyze the fact space model briefly mentioned in the previous chapter.

We illustrate our points throughout with a chat server that connects an arbitrary number of participants. It relays text typed by a user to all others and generates announcements about the arrival and departure of peers. A client may thus display a list of active users. The chat server involves chat-room state—the membership of the room—and demands many-to-many communication among the concurrent agents representing connected users. Each such agent receives events from two sources: its peers in the chat-room and the TCP connection to its user. If a user disconnects or a programming error causes a failure in the agent code, resources such as TCP sockets must be cleaned up correctly, and appropriate notifications must be sent to the remaining agents and users.

3.2 Shared memory

Shared memory languages are those where threads communicate via modifications to shared memory, usually synchronized via constructs such as monitors (Gosling et al. 2014; IEEE 2009; ISO 2014). Figure 4 sketches the heart of a chat room implementation using a monitor (Brinch Hansen 1993) to protect the shared members variable.