History of Syndicate, 2012–

The ideas of Syndicated Actors and Dataspaces grew from my experience of building and supporting RabbitMQ, followed by my PhD research, post-doctoral research, and a lot of hacking and experimentation.

- 2010–2012 Racket-on-a-Router and os2.rkt

- 2012–2014 Marketplace, Network Calculus, and Minimart

- 2015–2017 Assertions, Dataspaces, Tries, Facets, and Syndicate

- 2018– Types, Security, Distribution, and System Syndicate

Bringing networking into our programming languages (and taking a PL approach to thinking about networks)

In 2008, while working on large-scale message broker federation, my colleagues and I discovered John Day’s book. Day quotes Bob Metcalfe’s memorable statement:

Networking is interprocess communication.1

Inspired by this, by our work on RabbitMQ, and by my own observations and experiments, I left industry for a few years in academia, studying toward a PhD in programming languages with Matthias Felleisen.

For my PhD work, I explored the converse of Metcalfe’s idea: whether interprocess communication might be networking. That is, whether the communication primitives we choose for our programming languages and programming models could be drawn more directly from our experience of computer networking.

The Syndicated Actor model, Dataspaces, and the Syndicate DSL are the result.

2010–2012 Racket-on-a-Router and os2.rkt

The first direction I followed was to use Racket to build a number of network-protocol-related services including a DNS server and recursive resolver and an SSH client/server protocol implementation.

The idea was that the lessons from these, combined with my previous Erlang and RabbitMQ experience, and ideas from Racket’s “big-bang” functional interactive graphics system, would let me distill a general model of a “functional operating system” suitable for writing communicating and interactive programs.

The result was

a small, pure-functional “operating system” for Racket called os2.rkt, based around an

actor behaviour type of roughly:

State × Event → State × [Action]

incorporating counterparty presence notifications alongside regular Actor-style message delivery.

Here we can already see the seeds of the generalized state-replication

mechanism of dataspaces in the specialized form of “topics”,

“publishers” and “subscribers” that can see each others’ presence as

well as send each other messages. All of the subsequent systems use

actor behaviour types morally equivalent to that of os2.rkt, as

well.

2012–2014 Marketplace, Network Calculus, and Minimart

It turned out that the specialized form of state-replication and counterparty presence notification wasn’t enough to elegantly capture programs like my DNS and SSH implementations.

Some programs were naturally expressed using not mere observation of

presence, but observation of observation of presence — that is,

programs that would react to the presence of programs that would react

to the presence of programs (!).2 As an

example, consider a program that wants the contents of the document at

a URL. It could subscribe to a topic (contents-of the-url). But

we can’t pre-create actors offering to publish on each possible

matching publisher topic of that form: there are an unbounded number

of possible URLs of interest.

(Interest in (interest in (interest in (interest in … in messages))))

The solution is to have an “HTTP client driver” express interest in

knowing about the existence of any subscribers that are in turn

interested in any topic matching a wildcard, (contents-of _).

The ideas in os2.rkt therefore evolved into a system called

Marketplace which had a

tower of levels of “subscription”.

-

At the lowest level, level zero, would be publishers and subscribers, exchanging messages.

-

At level one would be presence notifications describing the presence or absence of publishers and subscribers at level zero.

-

Level two would carry notifications describing the presence of peers at level one; and so on.

This introduced a new ability that features prominently in dataspace-based programming: the ability of a program to react to demand for some service or resource by producing supply of that service or resource.

An example of this can be seen in the TCP echo server example given in an ESOP 2014 paper I co-wrote with Sam Tobin-Hochstadt and Matthias Felleisen, “The Network as a Language Construct”, that came out of the work on Marketplace:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

#lang marketplace

(observe-publishers (tcp-channel ? (tcp-listener 5999) ?)

(match-conversation (tcp-channel from to _)

(on-presence (spawn (echoer from to)))))

(define (echoer from to)

(transition stateless

(publisher (tcp-channel to from ?))

(subscriber (tcp-channel from to ?)

(on-absence (quit))

(on-message

[(tcp-channel _ _ data)

(send-message (tcp-channel to from data))]))))

On line 3, we see interest at level 1 in the existence of publishers

on a TCP channel connected to local port 5999. When a connection

arrives, a matching publisher will appear, and the event handler on

line 5 will run, spawning a new actor whose behaviour is governed by

the routine echoer.

In that actor, line 11 reacts to absence of the matching publisher: that is, to the peer’s disconnection. Line 9 establishes a matching publisher for a byte stream flowing out to the connected peer, and line 14 sends messages on that topic whenever incoming data is received.

But how does the system know to create a TCP server socket on port 5999 to begin with?

The structure of a running program with driver actors

The answer is that the TCP “driver” actor, written in Marketplace and wrapping Racket’s imperative TCP socket routines, expresses interest at level 2, reacting to the presence of “subscribers” like that seen on line 3 of the example. When it sees that kind of level-1 interest appear, it allocates a real TCP server socket, listens to it, and spawns a “driver-side” actor for each incoming connection. That actor, in turn, creates the publisher that line 3 is waiting for.

Layering: nesting worlds within one another

Our ESOP 2014 paper introduced the Network Calculus (NC), a formal model of a cleaned-up version of what Marketplace was doing.

NC reifies the network (a “world” in the NC paper, a “dataspace” today) that connects a group of actors. Besides the benefits of explicitly studying the underpinning networks of Actor systems, it allows nesting of worlds within one another, forming a strictly-hierarchical tree of actors:

Layered File Server / Word Processor architecture

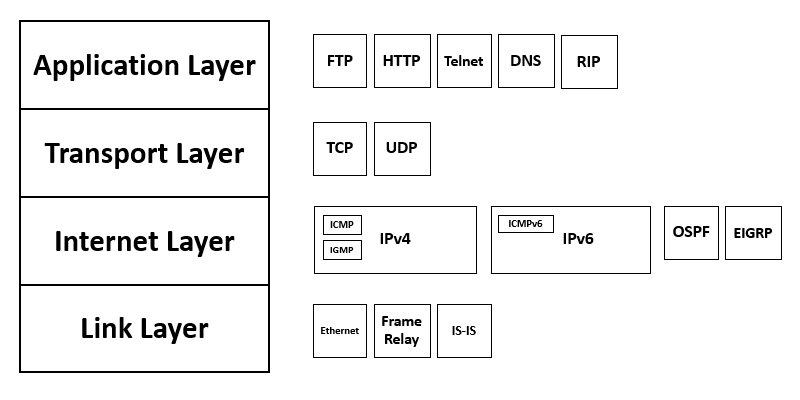

In the NC paper, we explored the hypothesis that such nesting corresponded to protocol layering, in the sense of the layers in the OSI model of networks.

Looking back at this hypothesis now, it’s clear to me that layering is important, but not primarily because it reflects protocol layering structures. Rather, its theoretical importance lies in the way it reflects physical containment and layering of computing and communication systems.3 It also has practical benefits: it serves well to isolate subsystems in a composed system. (Particularly when object-capabilities are added to the mix!)

One complete turn of the Research Cycle

Implementation experience, software prototyping, and abstraction of theory from praxis had led to a nice paper. The next step, closing the cycle, was to implement afresh, directly based on the theoretical model in the paper.

A new Racket language, minimart, was the result.

Minimart was, as it happens, a step back in terms of programmability. It did, however, lead to one extremely cool tech demo: a TCP/UDP/IP/ethernet stack. The demo offered glimpses of the possibilites and power inherent in the idea of providing a robust primitive for replicating state among actors in nontrivial programs.

Furthermore, Minimart was the first dataspace-like design to be implemented in a language other than Racket: js-marketplace brought Minimart to the browser. A websockets-and-JSON-based protocol allowed extension of Minimart presence, subscriptions, and messages across the network, exposing a system composed of a Racket server and multiple browser/JavaScript clients to the programmer as a single tree of Minimart actors.

Diagram showing physical and logical interconnection in a Browser/Server polyglot syndicated actor system, taken from Appendix C of my dissertation.

Despite the ergonomic problems of programming with Minimart, I felt that I was making good progress. There were four areas where I felt the system needed improvement:

-

Generality. Having presence in the system was great, but there were cases where I wanted to share state that didn’t map well onto a simple presence mechanism.

-

Efficiency. I needed a better way to index all the facts built up in the actor system. The Minimart implementation took O(n) time to propagate an event when n subscriptions existed in a program, even when only O(1) of those subscriptions was interested in the event.

-

Programmability. The pure-functional “reactor function” approach, with monolithic actor behaviours and explicit treatment of events and actions as values, wasn’t scaling well.

-

Composability, for want of a better term. While whole worlds (what I would later call “dataspaces”) could be nested in NC and Minimart, the way subscriptions and messages propagated across layers in the tree of actors didn’t seem very clean.

The next step of the research addressed these points, starting with generality and efficiency.

2015–2017 Assertions, Dataspaces, Tries, Facets, and Syndicate

Generality

A breakthrough came when I realized that presence information was just one kind of shared state to be replicated among interested parties. Generalizing from presence to what I started calling assertions—data items representing facts about some shared context—led to a rethink of the idea of “worlds” from NC to the first kind of “dataspace” in what was becoming a language called Syndicate.

Efficiency

I was sure there was some way to index over assertions so that routing of events within a dataspace could be done more efficiently than O(n). After a couple of false starts,4 I found the idea of visibly-pushdown languages (“VPLs”; Alur and Madhusudan 2009),5 which gave me what I needed.

A special kind of search trie could be used to represent whole sets of assertions. And because the language of assertions was itself visibly-pushdown, and VPLs are closed under union, intersection and negation6—exactly what I was using to compute overlap of interests!—I knew that my tries would also be closed under union, intersection and negation.

Here’s an example of a trie used for routing assertions in a dataspace, taken from the slides of my dissertation talk:

A trie representing a set of (Assertion × Actor)

The first version of this data structure was published in an ESOP 2016 paper that I co-wrote with Matthias Felleisen, “Coordinated Concurrent Programming in Syndicate”.

I flubbed the presentation of routing tries in the paper; a better (i.e. correct) presentation, including some optimizations and improvements that came subsequent to the paper, appears in the section on representing assertion sets in chapter 7 of my dissertation.

A (limited) theoretical and experimental evaluation of the performance characteristics of the data structure in use within a dataspace (in brief: reasonable (roughly O(log n)); comprehensible) appears in chapter 10 of my dissertation.

Programmability

It took me a while to realize that I had a new metaphor for programming communicating systems in mind, and that it deserved to be drawn out and written up.

A computational model

The idea of conversational concurrency (drawing on Gricean cooperativity, and elaborated on elsewhere on this website and in chapter 2 of my dissertation) gave names and meanings to the constructs I had in my mind that I struggled to express in the pure-functional approach embodied in Minimart.

Components, tasks, and conversational structure

A domain-specific syntax; a programming model

Reflecting these ideas into language constructs yielded a first, pure-functional version of Syndicate DSL syntax (also elaborated upon elsewhere on this website), which I published in “From Events to Reactions: A Progress Report” at PLACES 2016:

1

2

3

4

5

6

7

8

;; Syndicate DSL syntax "version 2016"

(actor (forever #:collect [(current-value 0)]

(assert (box-state current-value))

(on (message (set-box $new-value))

new-value)))

(actor (forever (on (asserted (box-state $v))

(send! (set-box (+ v 1))))))

Each Actor became internally structured as a tree of nested facets,

each representing some (sub-)conversation that the actor is engaged

in. Facets had endpoints representing individual subscriptions to

shared state or messages, and assertions publishing internal state

as shared state in the surrounding dataspace. Each facet’s internal

state was held in fields (the #:collect clause on line 2 above)

modelled by immutable variables. Fields were read by ordinary variable

reference (line 3), and every received event finished by yielding

zero, one, or more values (e.g. one, on line 5; zero, after the

send! on line 8) used to re-establish the facet (“forever”, line

2), with the new values placed in the facet’s fields for its next

incarnation. (If this is confusing, please take a look at

the PLACES paper.)

The initial Syndicate syntax was unsatisfactory: its pure-functional nature was too limiting for cross-facet, intra-actor interaction.

Shortly after PLACES 2016, I revised the syntax to be imperative instead, with intra-actor implicit dataflow connecting changes in local state to re-publications of a facet’s assertions:

1

2

3

4

5

6

7

8

;; Syndicate DSL syntax "version 2017"

(spawn (field [current-value 0])

(assert (box-state (current-value)))

(on (message (set-box $new-value))

(current-value new-value)))

(spawn (on (asserted (box-state $v))

(send! (set-box (+ v 1)))))

The program is almost the same, except for the way fields work. Facets are now no longer “re-established” after every event; instead, fields are declared as dataflow variables (line 2), read via procedure call (line 3), which marks them as “observed”, and written via procedure call (line 5), which marks them as “dirty”. Any assertion placed into the dataspace depending on an observed, dirty variable is republished after an event handler finishes running.

The revised syntax was published in the section on Syndicate DSL syntax in chapter 6 of my dissertation, and the syntax of current implementations is documented here.

Reflection on a running system

One lovely side effect of having a formal operational semantics for the Syndicated Actor model is that some great visualizations of running programs fall straight out, for free! For example, there’s a section on sequence diagrams in chapter 7 of my dissertation:

A sequence diagram produced from a recorded trace

These are nothing more than (completely automated!) renderings of recorded traces of actions and events in a running Syndicate program. They exactly correspond to the events delivered (and the code running as a result) during the program’s run. The connections among actors, the actions they perform, and the events their peers receive as a result are all drawn out by considering the formal-semantics-level meaning of each action.

A second complete turn of the Research Cycle

Having worked out the initial theory of syndicated actors, I produced two interworking implementations: Syndicate/rkt and Syndicate/js, both held in what was the main syndicate repository at the time.

I built a number of small and large programs in order to gain experience with the new design.

- A few larger Racket and Racket/JavaScript programs

- Many smaller Racket examples

- A few medium-sized graphical Racket programs;

- Several JavaScript examples, large and small (see also the writeup of the JS examples, with links to code and in-browser runnable demos).

Here are a few good ones:

-

A windowing system, widget library, and window manager. Everything on the screen is an assertion.

-

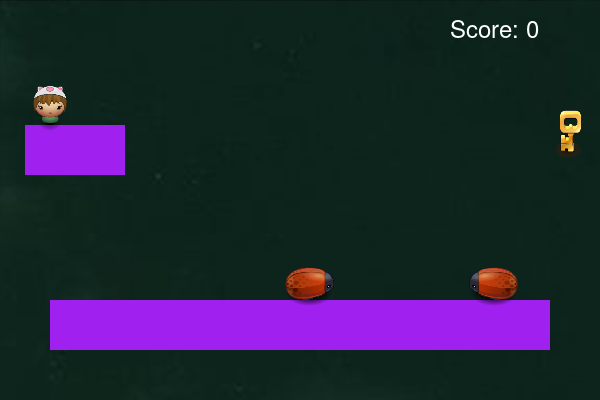

A 2D platform game. Everything on the screen is an assertion.

-

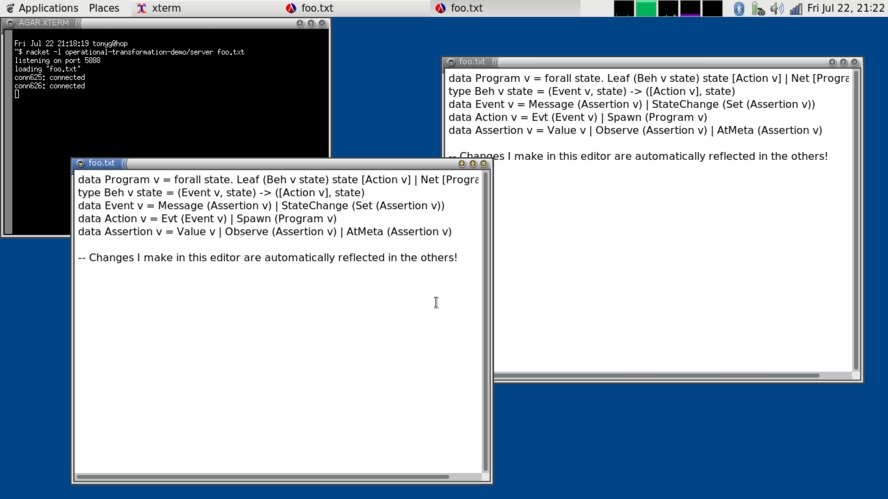

A collaborative GUI text editor using Operational Transformations.

-

A TCP/IP stack (ported from the earlier minimart-netstack to Syndicate).

Image credit: Michel Bakni, Wikimedia Commons -

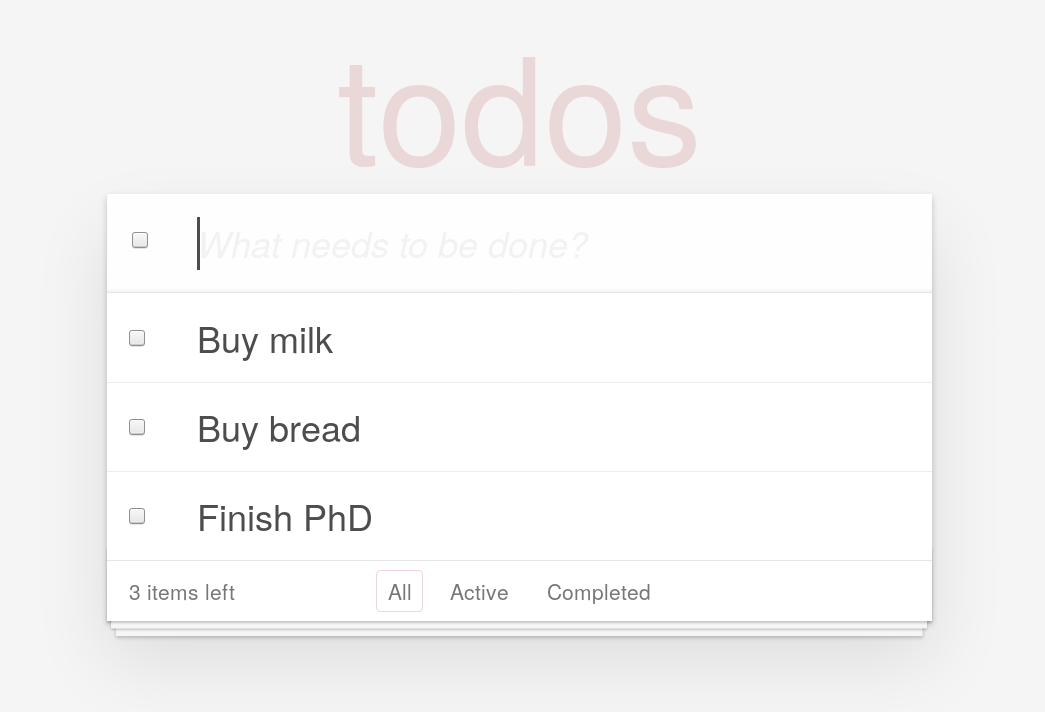

An implementation of a standard challenge problem for JavaScript frameworks, TodoMVC.

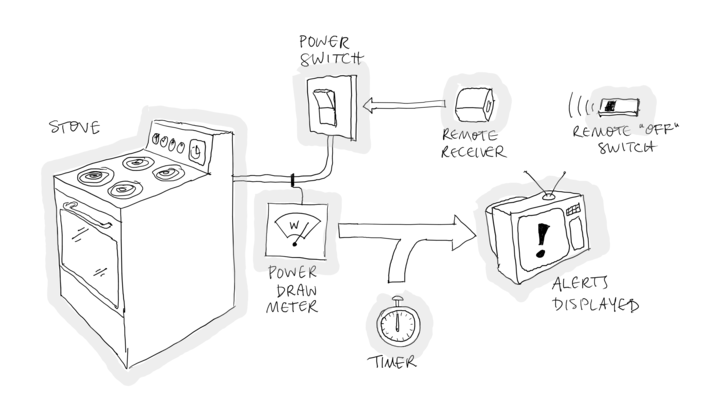

-

An Internet-of-Things scenario involving a “Smart Home”.

Composability

The NC concept of “meta-levels” for addressing successively outerward layers of containing dataspace never quite sat right, even in Marketplace and Minimart, but especially in Syndicate with its assertions and dataspaces.

Why should some kinds of routing information (meta-level number) be privileged in the syntax, while all other kinds of routing are to be done based on the structure of each assertion placed in a dataspace?

So I altered the system to include new kinds of assertion constructor:

1

2

3

4

5

(observe <some-topic>) ;; Subscribe to <some-topic> *here*, in this dataspace

(observe (inbound <some-topic>)) ;; Subscribe to <some-topic> in the containing dataspace

<some-topic> ;; Assert <some-topic> *here*, in this dataspace

(outbound <some-topic>) ;; Assert <some-topic> in the containing dataspace

Each assertion expressing interest in some inbound assertion set

automatically entailed another assertion:

(observe (inbound <some-topic>>)) ⇒ (outbound (observe <some-topic>))

A “relay” tracked inbound/outbound assertions within a contained

dataspace, transferring matching assertions to and from the containing

dataspace.

The story of relaying of assertions between nested dataspaces still seemed a bit awkward. What was that special “relay” doing there? Could it be generalized? How did the built-in kind of dataspace relay relate to other kinds of (user-implemented) relay, for example relays connecting client (e.g. in-browser) and server dataspaces across some network link? They appeared to be strongly similar, so what made the build-in dataspace relay so special? Could the notion of relay be generalized to make all kinds of relay consistent, and all user-implemented, with no special-casing for nested dataspaces at all?

.

.

.

Well, I didn’t have time to address that question. It was left for future work in my dissertation. Finally, in December 2017, I graduated!7 🎉🎉🎉

2018– Types, Security, Distribution, and System Syndicate

Sam Caldwell’s work: Types

My colleague Sam Caldwell (see also his github page) has been working on types for dataspaces. His first results were published in the Journal of Functional Programming in 2020, in a paper he wrote with Matthias Felleisen and me called “Typed Dataspace Actors”.

I’ve been continuing to profitably ignore the thorny question of types, focussing instead on operational, scaling and implementation questions.

Postdoc: Simplification, Speedup, and Federation

During 2018 and 2019, I was privileged to be able to work as a post-doctoral researcher with Jeremy Singer at the University of Glasgow on the FRμIT project. A special Alpine-derived distribution of Linux, FruitOS, harnesses hundreds or thousands of Raspberry Pi computers together into a large compute cluster. The result is cloud-like, but with fragments of the cloud executing in people’s homes or offices, or even out in the field gathering sensor data or performing other Internet-of-Things-type tasks. The management layer brings together the individual nodes, whereever they happen to be.

During this time, I hit upon a new implementation technique for dataspaces, replacing the pure-functional approach of Syndicate/rkt with a new imperative design. The new technique (hopefully to make its way into a publishable paper someday) involved a new dataspace index structure, inspired by the tries from my dissertation but simpler, faster and more flexible.

The new approach not only simplified the implementation significantly, it sped it up by a factor of about 30 (!!). The changes led to improvements in the TCP/IP stack implementation as well as many other example programs, small and large.

The new design also helped shed light on the tricky issue of relaying I mentioned earlier, bringing the overall syndicated-actor concept closer to more traditional actor models. This is great, because it will help make clear the points of difference between syndicated actors and other Actor-like systems.

Another line of research I followed during my post-doctoral time was to experiment with placing a Syndicate broker on each node in a FRμIT cluster. I designed a federation protocol (JavaScript; Racket) that allowed nodes to self-assemble into a (strict) tree overlay dataspace that management and user programs running on each node could access.

Docker containers, virtual machines, Raspberry Pi nodes, and users’ own devices and machines were all able to join the federation tree. Docker container nodes were subordinate to the node running on their host; nodes within a LAN elected a peer to be the supernode representing the whole group to the portion of the tree outside the LAN.

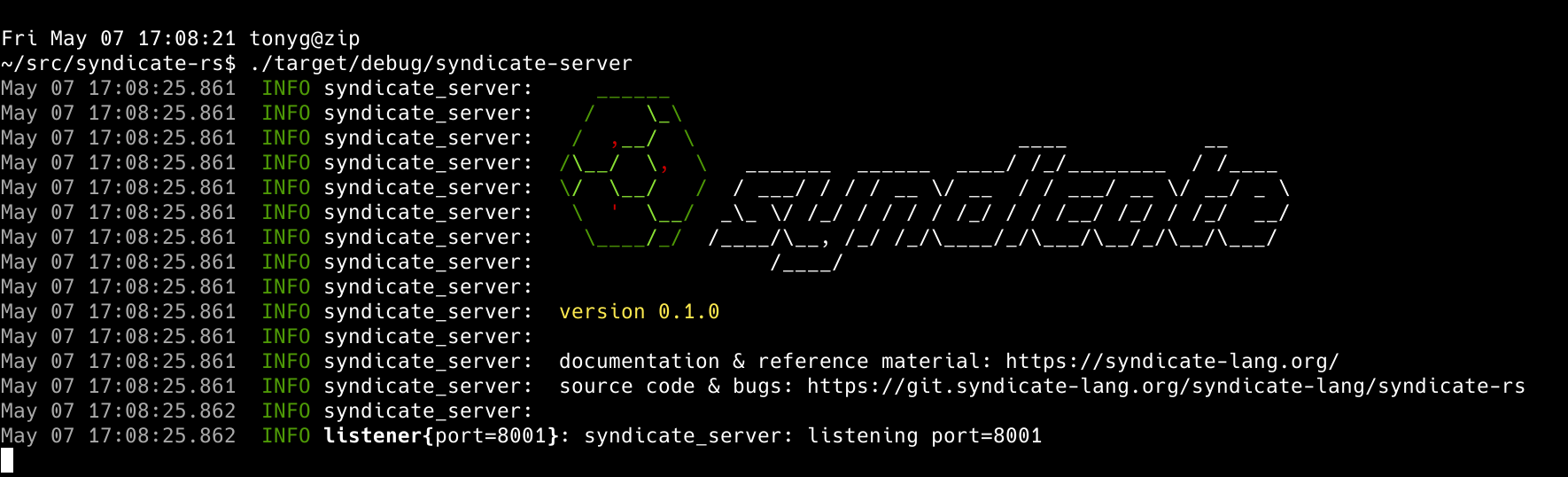

Screenshot of syndicate-rs broker running

Screenshot of syndicate-rs broker running

Because the new implementation technique was so fast, and the compute power of Raspberry Pi nodes so limited, I decided to explore implementing a Syndicate broker in Rust.8

The third turn of the Research Cycle

My postdoc came to its end in mid-2019. That feels to me like the end of the second research cycle. I guess they last about five years each?

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

Experience -----> Implementation <----> Theory

^ /

+----------------------------+

-2010 Erlang, RabbitMQ etc.

2011 racket-dns

2011 racket-ssh

2011 os2.rkt

2013 marketplace

2014 Network Calculus

2014 minimart

2014 minimart-netstack

2014 js-marketplace

- - -

2015 --- Assertions

2015 | Routing

2015 Syndicate/rkt

2016 | Facets

2017 ---

2017 Syndicate/js

2017 Many examples

2018 Imperative Syndicate

2019 FRμIT

- - -

2020 preserves Preserves + Schemas

2020 syndicate-rs

2021 --- Capabilities

2021 | Locations

2021 | Relays

2021 "novy-syndicate"

2021 Cellphone project ⋮ System Layer

In 2020, I did a lot of work on specification and implementation of the Preserves data language, which acts as a foundation supporting many different parts of the dataspace and Syndicate designs. In particular, work on a Rust implementation, needed for major improvements in Syndicate/rs, helped identify and straighten out a few problems with earlier Preserves spec drafts.

Using VNC to develop system code in Smalltalk on the phone itself

Later in the year, I started working on Squeak-on-a-cellphone, the beginnings of a project to explore dataspaces at the system layer (about which more below).

I also started work on Syndicate/java, getting back in touch with the Java language after several years of letting my skills get rusty.9 So far, I’ve limited myself to implementing a straightforward hybrid of an Erlang-style (with links and monitors) and E-style (with references to objects within a vat) Actor system, not including dataspace or Syndicate primitives.

It’s quite interesting all by itself: it’s very fast and able to use all the cores in a machine very effectively (~40 million messages/sec across 48 cores; ~3 million messages/sec on one core; see the Syndicate/java README for more information!). Next steps will be to upgrade it to support Syndicated Actor model primitive operations.

This year (2021), I’ve continued to work on Preserves. I rewrote the

JavaScript implementation in TypeScript, which led to a much improved

Syndicate/js TypeScript rewrite, including a tsserver plugin for

Syndicate DSL IDE support. See the

“typescript1”

branch of Syndicate/js (though that’s no longer the head of active

development).

I’ve also started working seriously on schemas for Preserves, allowing a schema compiler to do the heavy lifting of mapping Preserves documents to host-language data types. It isn’t written up yet, but you can get a taste by looking at the meta-schema and reading the section on Preserves on the “About” page.

The thing I’m most excited about, though, is that I finally managed to solve a long-standing design riddle, hinted at in the section on security properties in chapter 11 of my dissertation: what is a good way to secure access to syndicated-actor programs and systems? The answer I’ve come up with is a generalization of the powerful idea of object-capabilities, using Macaroons as a kind of capability for securing access to dataspaces and other objects in a distributed syndicated-actor system. So far, so good; a new sketch of a capability-securable implementation looks very promising (not to mention a little faster than previous implementations!).

I’m delighted to be able to say that the next phase of work on this project has been selected for a generous NLnet Foundation grant! The funded project is investigating the following question as part of the NGI Zero PET programme:

Could dataspaces be a suitable system layer foundation, perhaps replacing software like systemd and D-Bus?

The project involves placing dataspaces at the heart of a syndicated-actor system layer implementation, initially running on a cellphone using some of the code and ideas from last year’s Squeak-on-a-cellphone work.

Please see the system-layer project page and the Syndicate blog for the latest news.

-

Robert Metcalfe, 1972, quoted in Day (2008). ↩

-

We wracked our brains trying to think of practical uses of even higher levels in the tower, but the highest we ever practically got was level 3: interest in interest in interest in messages on a given topic. Allocating a TCP server socket, described here on this page, is an example of this. ↩

-

And, actually, I think there’s more thinking, experimentation and writing to be done on layering in various contexts and its relationship to nested dataspaces. More future work! ↩

-

My initial thinking involved anti-unification (set union), since I’d been thinking of the intersection of a message with a subscriber’s topic as a kind of unification (set intersection). ↩

-

Alur, Rajeev and P. Madhusudan. 2009. “Adding nesting structure to words.” Journal of the ACM 56(3)16:1–16:43. https://dx.doi.org/10.1145/1516512.1516518 ↩

-

VPLs generally have really great closure properties. See Alur and Madhusudan 2009, §3.5. ↩

-

Garnock-Jones, Tony. “Conversational Concurrency.” PhD, Northeastern University, 2017. ↩

-

The resulting software is looking really promising: a single, statically-linked binary, that can be dropped onto individual machines (including Raspberry Pi machines), and that can relay ~800,000 messages per second (on a reasonably powerful x86_64 machine) and ~500,000 shared state changes per second (on the same machine. ↩

-

Pun unintentional, though welcome. ↩